[AINews] Anthropic launches the Model Context Protocol

Twitter and Reddit Recap

The section provides a recap of discussions on Twitter and Reddit related to AI developments and initiatives. It covers topics such as the launch of Anthropic's Model Context Protocol (MCP), Claude's AI integration capabilities, upcoming NeurIPS events, Amazon's collaboration with Anthropic, open-source initiatives like NuminaMath dataset licensing, and the release of models like OuteTTS-0.2-500M. Additionally, it discusses AI capability tests, model performance comparisons, and the development of tools like SmolLM2 and Optillm. These platforms serve as forums for insights, critiques, and advancements in the AI community.

AI Discord Recap

Theme 1: AI Model Shuffles Stir Up User Communities

- Users on the AI Discord are experiencing disruptions due to changes like Cursor's removal of long context mode and Qwen 2.5 Coder's performance variability. There is praise for GPT-4o's impressive performance.

Theme 2: AI Tools and Platforms Ride the Rollercoaster

- Challenges are seen with LM Studio's model search limitation and rate limits on the OpenRouter API, while discussions compare tools like Aider, Cursor, and Windsurf.

Theme 3: Fine-Tuners Face Trials and Tribulations

- Issues arise with fine-tuning Command R models and using embeddings on Windows. There is a debate about fine-tuning models with large PDFs.

Theme 4: Communities Collaborate, Commiserate, and Celebrate

- The Solveit community maps global user locations, while enthusiasts join weekly study groups for prompt hacking. Users bond over shared challenges and experiences with Perplexity Pro glitches.

LLM Agents (Berkeley MOOC) Discord

Compute Resources Deadline Today: Teams must submit the GPU/CPU Compute Resources Form by the end of today via the provided link to secure compute resources for upcoming activities. Late submissions may affect resource allocation and delay project progress, so submitting early is advised.

Axolotl AI Discord

- PDF Fine-Tuning Inquiry: A member inquired about generating an instruction dataset for fine-tuning a model using an 80-page PDF containing company regulations and internal data. They wondered if the document's structure with titles and subtitles could aid in processing with LangChain.

- Challenges in PDF Data Extraction: Another member suggested checking how much information could be extracted from the PDF, noting that some documents, especially those with tables or diagrams, are harder to read.

- Extracting relevant data from PDFs varies significantly depending on their layout and complexity.

- RAG vs Fine-Tuning Debate: A member shared that while fine-tuning a model with PDF data is possible, using Retrieval-Augmented Generation (RAG) would likely yield better results. RAG retrieves relevant passages at query time and supplies them to the model as context, so the document does not have to be learned through training.
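The question about using the PDF's titles and subtitles can be illustrated with a minimal sketch. It assumes the text has already been extracted from the PDF and that headings are numbered lines such as "1 Title" or "1.1 Subtitle"; the `split_by_headings` helper, the heading pattern, and the sample text are all hypothetical, not something described in the discussion. A body line that happens to start with a number would be misread as a heading, so a real document would need a stricter pattern.

```python
import re

# Assumption: a heading is a line like "1 Title" or "2.3 Subtitle".
HEADING = re.compile(r"^(\d+(?:\.\d+)*)\s+(.+)$")


def split_by_headings(text):
    """Group lines of extracted text under the most recent heading."""
    sections = []
    current = {"number": "", "title": "preamble", "body": []}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        match = HEADING.match(line)
        if match:
            # Close the previous section before starting a new one.
            if current["body"] or current["number"]:
                sections.append(current)
            current = {"number": match.group(1), "title": match.group(2), "body": []}
        elif line:
            current["body"].append(line)
    if current["body"] or current["number"]:
        sections.append(current)
    return [
        {"number": s["number"], "title": s["title"], "text": " ".join(s["body"])}
        for s in sections
    ]


# Made-up sample standing in for text extracted from a regulations PDF.
sample = """1 Leave Policy
Employees accrue leave monthly.
1.1 Carry-over
Unused leave expires in March.
2 Expenses
Receipts are required."""

chunks = split_by_headings(sample)
for chunk in chunks:
    print(chunk["number"], chunk["title"], "->", chunk["text"])
```

Each chunk keeps its heading, which could serve either as retrieval metadata in a RAG setup or as the topic for generated instruction pairs.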

Solveit and SIWC Course Announcements

The announcements section covers the launch of the How To Solve It With Code (SIWC) course and instructions for participants. It introduces the new Dialogue Engineering method and the Solveit platform. Participants are advised to log in to the Solveit platform for essential course details and to maintain confidentiality within the Discord group. Ongoing communication regarding the course will take place on Discord.

Using LLMs for Q&A Pairs and RAG Strategy

- Using LLMs for Q&A Pairs: Members discussed feeding text chunks to a language model to generate Q&A pairs, suggesting RAG as an alternative strategy. Skepticism was expressed about the need for a dataset.

- RAG Strategy for Hybrid Retrieval: A recommendation was made for using a RAG approach with a hybrid retrieval strategy, citing successful experience with 500 PDFs in a specific domain including chemical R&D. Separately, concerns were raised about the object type in Mojo being outdated and in need of significant rework.
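The discussion does not say how the keyword and vector results were combined. One common option is reciprocal rank fusion, sketched below as an illustration rather than as the member's actual method. The two input rankings, the document IDs, and the constant `k=60` are assumptions made for the example.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) per list it appears in;
    scores are summed across lists, and higher totals rank first.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score, breaking ties by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword search and a vector search.
keyword_hits = ["doc_b", "doc_a", "doc_d"]
vector_hits = ["doc_a", "doc_c", "doc_b"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear near the top of both lists rise to the top of the fused ranking, which is the main appeal of a hybrid approach when exact terminology and semantic similarity both matter.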

LM Studio Hardware Discussion

- LM Studio works with various GPUs: Users discussed the compatibility of LM Studio with different GPUs, including an inquiry about the RX 5600 XT and advice on PSU wattage for a build with a 3090 and a 5800X3D.

- High VRAM cards for demanding applications: The conversation highlighted the need for GPUs with high VRAM, with recommendations for at least 16GB of VRAM and for the 3090 in particular.

- Soaring GPU prices: Users expressed frustration over the increasing prices of GPUs, especially for older series like Pascal.

- PCIe impact on performance: The impact of PCIe revisions on model loading times versus inference speeds was discussed, emphasizing that PCIe 3.0 does not significantly affect inference speed.
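A rough back-of-the-envelope calculation shows why the PCIe revision matters mostly at load time. The sketch below uses the theoretical x16 bandwidths of PCIe 3.0 and 4.0; the 20 GB model size is a hypothetical figure, and real load times are usually longer because disk reads and driver overhead also contribute.

```python
# Approximate theoretical bandwidth of an x16 link, in GB/s.
PCIE_X16_GB_PER_S = {"3.0": 15.75, "4.0": 31.5}


def min_transfer_seconds(model_gb, pcie_gen):
    """Lower bound on the time to copy model weights over the bus."""
    return model_gb / PCIE_X16_GB_PER_S[pcie_gen]


model_gb = 20.0  # hypothetical size of the weights being loaded into VRAM

for gen in ("3.0", "4.0"):
    seconds = min_transfer_seconds(model_gb, gen)
    print(f"PCIe {gen} x16: at least {seconds:.2f} s to transfer {model_gb:.0f} GB")
```

The difference is a one-time cost of around a second or less in this example. Once the weights sit in VRAM, generating tokens moves comparatively little data across the bus, which is consistent with the point that PCIe 3.0 does not significantly affect inference speed.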

LLM as Judge

- Configuring Command R for complete answers: When using the Command R model, occasionally the output stops at random tokens due to hitting the max_output_token limit. Strategies to configure the model parameters for better outputs were requested.

- Cohere API returns incomplete results: A user reported that responses from the Cohere API arrived with missing content, while integrations with Claude and ChatGPT worked without issues.

- Integrating Cohere API in Vercel deployment: A user had difficulty deploying a React app that uses the Cohere API on Vercel, with the deployment returning 500 errors related to client instantiation.

- Feedback on batching + LLM approach: A member sought feedback on a batching + LLM approach and described challenges with hallucination when using the command-r-plus model to identify sensitive fields.

- Exploring multi-agent setups: A member asked about LangChain involvement, described trying out a massively multi-agent setup, and questioned the role of the 'judge' in passing or failing after analysis.
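For the truncated-output problem described above, one generic approach is to detect that a reply was cut off by the token limit and retry with a larger budget. The sketch below is not Cohere's API: `generate` is a hypothetical callable returning the text and a finish reason, the `"MAX_TOKENS"` and `"COMPLETE"` strings are assumed values, and `fake_generate` is a stand-in so the example runs without network access.

```python
def generate_until_complete(generate, prompt, max_tokens=256, ceiling=4096):
    """Retry with a doubled token budget while the reply is cut off.

    `generate(prompt, max_tokens)` must return (text, finish_reason).
    Stops when the reply completes or the budget reaches `ceiling`.
    """
    while True:
        text, finish_reason = generate(prompt, max_tokens)
        if finish_reason != "MAX_TOKENS" or max_tokens >= ceiling:
            return text, finish_reason, max_tokens
        max_tokens = min(max_tokens * 2, ceiling)


def fake_generate(prompt, max_tokens):
    """Stand-in for a real model call: the full reply is 600 words long."""
    words = ["word"] * 600
    if max_tokens < len(words):
        return " ".join(words[:max_tokens]), "MAX_TOKENS"
    return " ".join(words), "COMPLETE"


text, reason, budget = generate_until_complete(fake_generate, "Summarize the policy.")
print(reason, budget, len(text.split()))
```

Retrying from scratch costs extra tokens, so setting a sufficiently high limit up front is cheaper when the expected answer length is known.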

Discussion on Various AI Development Topics

Several discussions on different AI development topics were held in various channels. These discussions ranged from custom reference models and full-finetune recipes in Torchtune to synthetic data generation and foundation model development in DSPy. Additionally, Lumigator for LLM selection and ethical AI development vision were covered in Mozilla AI. Lastly, API key generation issues were addressed in AI21 Labs.

FAQ

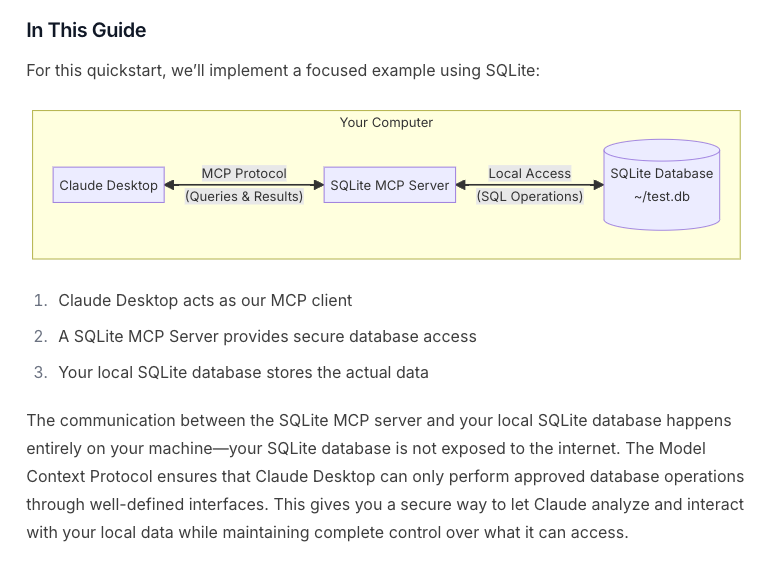

Q: What is the purpose of Anthropic's Model Context Protocol (MCP)?

A: Anthropic's Model Context Protocol (MCP) is an open protocol for connecting AI assistants such as Claude to external data sources and tools.

Q: Can you explain the key features of tools like SmolLM2 and Optillm in the AI community?

A: SmolLM2 is a family of small language models from Hugging Face, and Optillm is an optimizing inference proxy for LLMs. The recap mentions both among recent community developments without going into their features.

Q: What are some challenges faced by users with LM Studio's model search limitation?

A: Users have reported challenges with LM Studio's model search limitation, which may restrict their ability to find the most suitable models for their needs.

Q: How do fine-tuners navigate issues when fine-tuning Command R models and using embeddings on Windows?

A: Fine-tuners navigate issues when fine-tuning Command R models and using embeddings on Windows by seeking solutions and workarounds within the AI community.

Q: What is the significance of using a Retrieval-Augmented Generation (RAG) approach over fine-tuning models with large PDFs?

A: A member suggested that a Retrieval-Augmented Generation (RAG) approach would likely yield better results than fine-tuning on PDF data, because it supplies passages retrieved from the documents to the model at query time.

Q: How do users address the challenges posed by soaring GPU prices for demanding AI applications?

A: Users address the challenges posed by soaring GPU prices for demanding AI applications by discussing alternative GPU options and sharing recommendations within the community.

Q: What strategies are recommended to configure model parameters for better outputs when using the Command R model?

A: The recap does not record specific recommendations. A user asked how to configure the model's parameters because output from the Command R model occasionally stops at random tokens when it hits the max_output_token limit.

Q: What difficulties have users encountered when integrating the Cohere API in Vercel deployment?

A: Users have encountered difficulties when integrating the Cohere API in Vercel deployment, leading to 500 errors related to client instantiation.

Q: How do users explore multi-agent setups and the role of the 'judge' in passing or failing after analysis?

A: A member asked about LangChain involvement, tried out a massively multi-agent setup, and questioned what role the 'judge' plays in passing or failing after analysis.
