[AINews] DeepSeek-R1 claims to beat o1-preview AND will be open sourced • Buttondown

Chapters

AI Twitter and Reddit Recaps

AI Model Development and Research Tools

Deep AI Models Discussions

Discord Highlights

Innovations in AI and Design

Aider Setup, Fedora KDE, AI Training Checkpoints, and SageAttention2

LM Studio Hardware Discussion

WebGPU and Metal Compatibility

Cohere General Discussions

Torchtune Discussions and Updates

Live Event Announcement and Demo

AI Twitter and Reddit Recaps

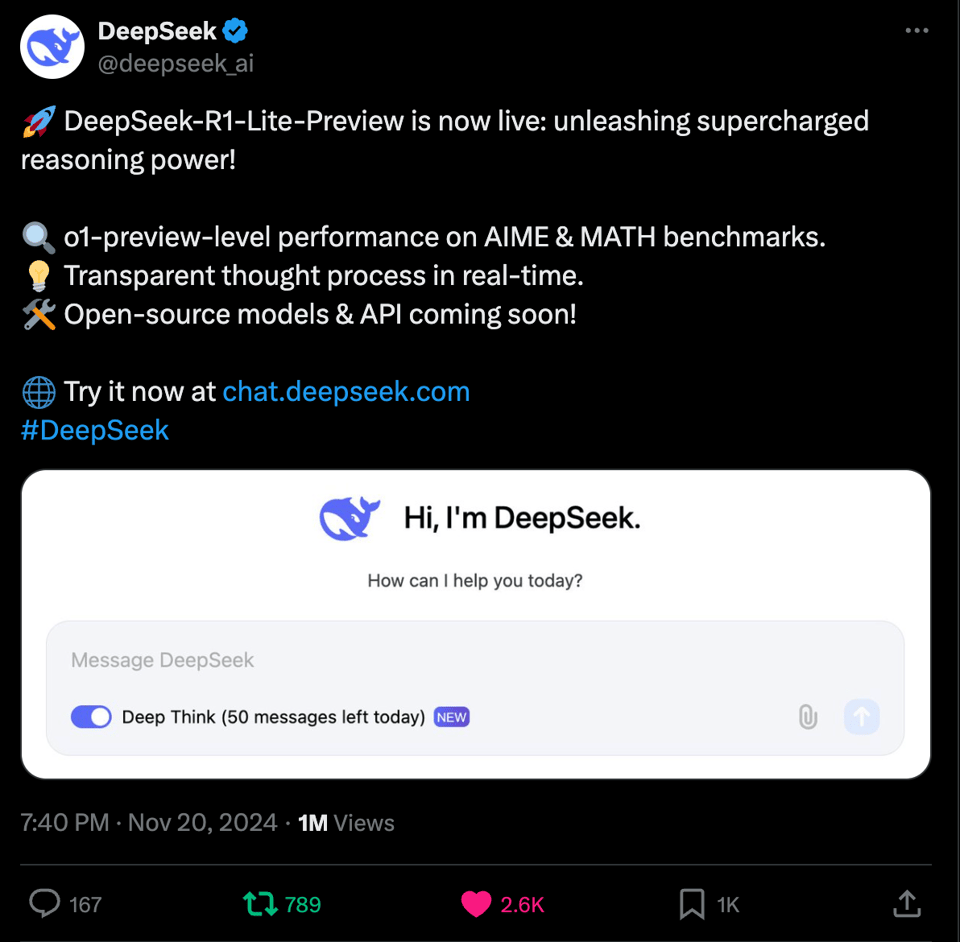

The AI Twitter recap covers NVIDIA's financial success, the launch and evaluation of DeepSeek-R1-Lite-Preview, Google's AlphaQubit, creative-writing enhancements to GPT-4o, LangChain and LlamaIndex developments, and AI-related memes. The AI Reddit recap focuses on DeepSeek-R1-Lite matching o1-preview on math benchmarks, the release of a 1-trillion-parameter MoE model by Chinese AI startup StepFun, and discussion of open-source LLM tools such as a Research Assistant and agent memory frameworks.

AI Model Development and Research Tools

This section covers several AI research tools and developments. It showcases an AI Research Assistant that conducts web research and produces detailed documents with summaries. The Assistant is built with Python, Ollama, and local LLMs, and users reported varying success depending on the LLM they chose. Suggested improvements included adding OpenAI API support, and the discussion stressed how important it is to find and summarize real research content accurately. A second thread compares LLM agent memory frameworks and their implementations, focusing on Letta, Memoripy, and Zep, and mentions vector-based memory systems. The section also explores hardware optimization for AI, in particular running Large Language Models on a Raspberry Pi with AMD GPU acceleration. Lastly, it presents an in-browser site builder powered by Qwen2.5-Coder that uses WebGPU and OnnxRuntime-Web.
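To make the idea of a vector-based memory system concrete, here is a minimal sketch. It is not the API of Letta, Memoripy, or Zep; the `VectorMemory` class, its methods, and the hand-written three-dimensional embeddings are all hypothetical, and a real system would obtain embeddings from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


class VectorMemory:
    """Hypothetical toy memory: stores (embedding, text) pairs in a list."""

    def __init__(self):
        self._items = []

    def add(self, embedding, text):
        self._items.append((list(embedding), text))

    def search(self, query, k=1):
        # Rank stored memories by similarity to the query embedding.
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


# Toy embeddings written by hand purely for illustration.
memory = VectorMemory()
memory.add([1.0, 0.0, 0.0], "user prefers Python")
memory.add([0.0, 1.0, 0.0], "user runs models locally with Ollama")
memory.add([0.0, 0.0, 1.0], "user asked about GPU pricing")

top = memory.search([0.1, 0.9, 0.0], k=1)
```

The linear scan keeps the sketch short; production systems typically replace it with an approximate nearest-neighbor index.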

Deep AI Models Discussions

Several Discord channels carried updates and discussions on recent advances in AI models and tooling, from deploying custom AI models on Hugging Face to new papers on AI security. Topics included the Automated AI Researcher, semantic search challenges with Ada 002, LangGraph learning initiatives, and NeurIPS NLP evaluations.

Discord Highlights

- API Usage Challenges: A member reported frustrations with available API options.

- Model Option Clarification: Discussions on the 4o model version.

- High Temperature Performance: Considerations on model responsiveness at higher temperatures.

- Beta Access to o1: Excitement over beta access granted by NH.

- Delimiter Deployment in Prompts: Using delimiters to structure prompts for better responses.

- Adaptive Batching Optimizes GPU Usage: Utilizing adaptive batching to prevent errors during training.

- Enhancing DPO Loss Structure: Concerns on the TRL code structure regarding DPO modifications.

- SageAttention Accelerates Inference: Achieving speedups compared to other methods.

- Benchmarking sdpa vs. Naive sdpa: Evaluating performance differences.

- Nitro Subscription Affects Server Boosts: Impact of cancelling Nitro subscription on server benefits.

- Async functions awaitable in Mojo sync functions: Puzzlement over async function handling in Mojo sync functions.

- Inquiry about Mojo library repository: Curiosity about the availability of Mojo libraries similar to pip.

- Moonshine ASR Model Tested with Max: Testing Moonshine ASR model performance.

- Seeking Performance Improvements in Mojo: Request for assistance in improving performance in Mojo programs.

- Tencent Hunyuan Model Fine Tuning: Inquiries about fine-tuning the Tencent Hunyuan model.

- bitsandbytes on MI300X: Experiences using bitsandbytes on the MI300X system.

- Axolotl Colab Notebooks for Continual Pretraining of LLaMA: Inquiries about Colab notebooks for continual pretraining.

- Appreciation for Semantic Router: Recognition of Semantic Router for performance in classification tasks.

- Juan seeks help with multimodal challenges: Inquiries about multimodal challenges.

- Semantic Router as a Benchmark: Suggestion to use the Semantic Router as a baseline in classification tasks.

- Interactions about the new UI in OpenInterpreter: Mixed feelings on the new UI and rate limit configurations.

- Discussion on Intel AMA Session: Announcement of an upcoming AMA session with Intel.

- Refact.AI Live Demo on Autonomous Agents: Hosting a live demo on autonomous agents by Refact.AI.
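The delimiter item above can be illustrated with a short sketch. The `build_prompt` helper and the choice of triple quotes as the delimiter are assumptions made for this example; the discussion did not specify a particular delimiter or helper.

```python
def build_prompt(instruction, document, delimiter='"""'):
    """Wrap untrusted or long text in delimiters so the model can tell
    the instruction apart from the material it should operate on."""
    if delimiter in document:
        # The document would otherwise close the delimited block early.
        raise ValueError("document contains the delimiter; pick another one")
    return f"{instruction}\n\n{delimiter}\n{document}\n{delimiter}"


prompt = build_prompt(
    "Summarize the text between the triple quotes in one sentence.",
    "DeepSeek-R1-Lite-Preview was released this week.",
)
```

Checking for the delimiter inside the document is a small design choice: it avoids ambiguous prompts when the wrapped text happens to contain the same marker.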

Innovations in AI and Design

Generative design tools like Fusion 360 are available for free through educational licenses, making these design techniques accessible to students. Custom AI models can now be deployed on Hugging Face using handler files, which allows more customization for AI projects. A new paper by AI researchers at Red Hat/IBM discusses the security implications of publicly available AI models and proposes strategies to improve security. An Automated AI Researcher has been developed to generate research documents using web scraping.

Elsewhere, a psychedelic-themed video and a discussion of prompting techniques show AI applications blended with artistic flair. A reading group discussed GPU pricing, usage concerns, and channel etiquette. The NLP section highlighted challenges with semantic search, frustration with the Evaluate library, and the need for faster alternatives to Pandas.

Other Discord channels shared updates on model releases, model performance and testing, training dynamics, reinforcement learning trends, and model evaluation bottlenecks. Lastly, members discussed advances in vision support, multi-GPU training, internship opportunities, data quality in NLP tasks, and training Llama models, underscoring the importance of quality data and effective training strategies.
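As a rough illustration of a handler file, the sketch below assumes the passage refers to the custom-handler convention of Hugging Face Inference Endpoints, in which a `handler.py` exposes an `EndpointHandler` class with `__init__` and `__call__`. The "model" here is a hypothetical stand-in that upper-cases text so the example runs without any dependencies; the `"output"` key in the response is also an assumption.

```python
from typing import Any, Dict, List


class EndpointHandler:
    def __init__(self, path: str = ""):
        # A real handler would load model weights from `path` here.
        # Stand-in "model" (assumption): upper-cases the input text.
        self.model = lambda text: text.upper()

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        # Requests are assumed to carry their payload under "inputs".
        inputs = data.get("inputs", "")
        if isinstance(inputs, str):
            inputs = [inputs]
        return [{"output": self.model(text)} for text in inputs]


# Local smoke test of the handler, outside any hosting service.
handler = EndpointHandler()
result = handler({"inputs": "hello"})
```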

Aider Setup, Fedora KDE, AI Training Checkpoints, and SageAttention2

This section covers Linux distributions, AI training preferences, and new AI techniques. A member mentions how popular NixOS is among friends, which leads to banter about different Linux distros. Another member praises Fedora KDE for an interface that resembles Windows. The conversation then shifts to AI training, with a member sharing their checkpoint choice and inviting opinions. In another discussion, users troubleshoot exporting Llama 3.1 models for local use and ask about pre-tokenized datasets and checkpoint management. The section also introduces SageAttention2, a method for 4-bit attention optimization; its GitHub repository reports significant speedups compared to existing methods. Members note SageAttention2's limitations and its focus on inference rather than training. Lastly, the section emphasizes rigorous evaluation practices in AI model development, spotlighting Marius Hobbhahn's advocacy for evaluation science in the AI community.
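To give a feel for what 4-bit attention involves, the sketch below quantizes a query and a key vector to signed 4-bit integers and computes their dot product (one attention score) in integer arithmetic. This is not SageAttention2's implementation; the symmetric per-vector scheme, the function names, and the sample vectors are assumptions chosen for brevity.

```python
def quantize_int4(vec):
    """Symmetric per-vector quantization to integers in [-7, 7]."""
    max_abs = max(abs(x) for x in vec)
    if max_abs == 0.0:
        return [0] * len(vec), 1.0
    scale = max_abs / 7.0
    quantized = [max(-7, min(7, round(x / scale))) for x in vec]
    return quantized, scale


def int4_dot(a, b):
    """Approximate a . b using 4-bit integers plus two float scales."""
    qa, scale_a = quantize_int4(a)
    qb, scale_b = quantize_int4(b)
    integer_dot = sum(x * y for x, y in zip(qa, qb))
    return integer_dot * scale_a * scale_b


# Hypothetical query and key vectors.
q = [0.9, -0.3, 0.4, 0.1]
k = [0.2, 0.8, -0.5, 0.6]

exact = sum(x * y for x, y in zip(q, k))
approx = int4_dot(q, k)
error = abs(exact - approx)
```

The visible gap between `exact` and `approx` shows why naive 4-bit quantization is lossy and why practical methods add further accuracy-preserving steps.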

LM Studio Hardware Discussion

A member reported challenges running Qwen models on a virtual machine without a GPU, resulting in limited performance. Suggestions were made for the required RAM and GPU for DeepSeek v2.5 Lite. Discussions covered guidance on building workstations, GPU selection, and the differences between fine-tuning and running models efficiently. Users explored the usage of cloud-based solutions and shared recommendations for GPU utilization. The conversation emphasized the importance of system requirements, hardware optimization, and exploring alternative solutions for optimal performance.
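A common back-of-the-envelope estimate for such RAM and GPU questions is that model weights need roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule to a hypothetical 7-billion-parameter model; the parameter count is an illustrative assumption, not a figure from the discussion, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
def estimate_weight_memory_gib(num_params, bits_per_weight):
    """Approximate memory for model weights only, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


# Hypothetical 7B-parameter model at two precisions.
fp16_gib = estimate_weight_memory_gib(7e9, 16)
q4_gib = estimate_weight_memory_gib(7e9, 4)
```

Under these assumptions, 4-bit quantization cuts weight memory to a quarter of the 16-bit figure, which is often the difference between a model fitting on a consumer GPU or not.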

WebGPU and Metal Compatibility

A member in the 'webgpu' channel highlighted Philip Turner's repository featuring a Metal GEMM implementation but raised concerns about its readability. They discussed WebGPU's issues with MMA intrinsics and asked whether compilers have improved in this area. A technique for optimizing register utilization was also shared, which favors pointers over array access. Concerns were raised about performance regressions in Dawn versions after Chrome 130, with improvements noted in Chrome 131. The discussion also covered tools for disassembling AGX machine code and their relevance to measuring register utilization in compiled code.

Cohere General Discussions

- 403 Errors Indicate API Issues: Multiple members discussed encountering 403 errors due to invalid API keys when trying to access certain functionalities.

- Inquiry about Free Tier API Limitations: A member attempted to upgrade from the free tier to a production key but faced CORS errors.

- Collective Learning with Python: A participant shared their involvement in '30 Days of Python,' sparking a discussion on ongoing projects within the community.

Torchtune Discussions and Updates

Discussions in the Torchtune channel involved topics like implementing adaptive batching for optimal GPU utilization, evaluating changes in DPO loss structure, and preferences for standard methods over new approaches. Members also discussed the impacts of cancelling Nitro subscriptions, challenges in TRL code complexity, and simplifying the DPO recipe for future development. Additionally, the community explored SageAttention's impressive speedups, Moonshine ASR model performance, and building AI agents with LlamaIndex. The Modular channel covered topics like async functions in Mojo, SASS assembler intentions, and a call for presenters at FOSDEM AI DevRoom. Lastly, OpenInterpreter discussed new UI feedback, rate limit issues, interpreter design, and future UI configurations.
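For readers unfamiliar with the DPO loss mentioned above, the sketch below implements the standard per-example DPO objective, -log σ(β[(log π(y_w) - log π_ref(y_w)) - (log π(y_l) - log π_ref(y_l))]), in plain Python. It is not the Torchtune or TRL code under discussion; the function names and the sample log-probabilities are assumptions.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one preference pair.

    Inputs are sequence log-probabilities of the chosen and rejected
    responses under the policy and under the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_logratio - rejected_logratio))


# Hypothetical log-probabilities: policy identical to the reference.
baseline = dpo_loss(-5.0, -7.0, -5.0, -7.0)

# Policy has shifted probability toward the chosen response.
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)
```

When the policy equals the reference, the loss is log 2; it falls as the policy favors the chosen response more strongly than the reference does.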

Live Event Announcement and Demo

Refact.AI has announced a live demo and conversation in which its team members will discuss their latest technology. Participants are encouraged to join and engage with the demo of the autonomous agent.

FAQ

Q: What is the importance of the API usage challenges discussed in the essay?

A: The importance of API usage challenges is highlighted as members reported frustrations with available API options, facing 403 errors due to invalid keys and CORS errors when trying to upgrade from the free tier to a production key.

Q: Can you explain the discussions around model options and version clarifications?

A: Discussions on model options and clarifications involve conversations about different model versions, like the 4o model version, and seeking beta access to certain models like o1. Members share their experiences and seek clarity on the version differences.

Q: What is the significance of adaptive batching for GPU optimization in the AI research tools section?

A: The significance of adaptive batching is emphasized as a technique to optimize GPU usage and prevent errors during training, showcasing the importance of efficient resource utilization in AI model development.
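One simple form of adaptive batching is to halve the batch size whenever a training step runs out of memory. The sketch below shows that strategy only; the source does not say which strategy was discussed, and `find_max_batch_size`, `fake_step`, and the simulated capacity of 20 samples are hypothetical. A real training loop would catch the framework's own out-of-memory exception instead of `MemoryError`.

```python
def find_max_batch_size(try_step, start=64, minimum=1):
    """Halve the batch size until one training step succeeds."""
    batch_size = start
    while batch_size >= minimum:
        try:
            try_step(batch_size)
            return batch_size
        except MemoryError:
            # Back off and retry with a smaller batch.
            batch_size //= 2
    raise RuntimeError("even the minimum batch size does not fit")


def fake_step(batch_size, capacity=20):
    """Stand-in for a training step on a device that fits 20 samples."""
    if batch_size > capacity:
        raise MemoryError(f"batch of {batch_size} exceeds capacity")


chosen = find_max_batch_size(fake_step)
```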

Q: What are the key takeaways from the discussions on deep AI models in the Discord channels?

A: Key takeaways from the deep AI model discussions include advancements in deployment of custom AI models on platforms like Hugging Face, the community's engagement with new papers addressing AI security and insights, and the exploration of various AI model training dynamics and performance evaluations.

Q: What insights can be gained from the discussions on hardware optimization for AI, specifically using Raspberry Pi with AMD GPU acceleration?

A: Insights from the hardware optimization discussions highlight the utilization of Raspberry Pi with AMD GPU acceleration for running Large Language Models, showcasing alternative solutions for optimizing system performance in AI applications.
