[AINews] Google wakes up: Gemini 2.0 et al

Chapters

AI Twitter and Reddit Recap

Windsurf Wave 1 Enhances Developer Tools

Gemini 2.0 Flash Launch

Performance Boost with Named Results

OpenAI Discussion Forums

Training and Model Development Updates

Stability.ai and Stable Diffusion

Choosing Discord Names and Open Hangouts

LLM Agents (Berkeley MOOC) MOOC Questions

LLM Agents (Berkeley MOOC) ▷ #mooc-lecture-discussion (2 messages)

Continuing Content

AI Twitter and Reddit Recap

This section recaps recent AI discussions on Twitter and Reddit. Major highlights include the launch of Google's Gemini 2.0 Flash model, the integration of ChatGPT with Apple, Claude 3.5 Sonnet's performance, and Google's advancements. The section also covers industry dynamics, research updates from the NeurIPS conference, humorous takes on AI models, Gemini 2.0 Flash's results on SWE-Bench, and comparisons with other models. It additionally includes discussions from the LocalLlama subreddit on Gemini 2.0 Flash's performance, API usage, market expectations, and user experiences with the experimental version.

Windsurf Wave 1 Enhances Developer Tools

Windsurf Wave 1 has officially launched, integrating major autonomy tools like Cascade Memories and automated terminal commands. The update also improves image input capabilities and supports development environments such as WSL and devcontainers. Users have expressed excitement over the new features, highlighting Cascade Memories as a significant improvement. The launch aims to streamline developer workflows.

Gemini 2.0 Flash Launch

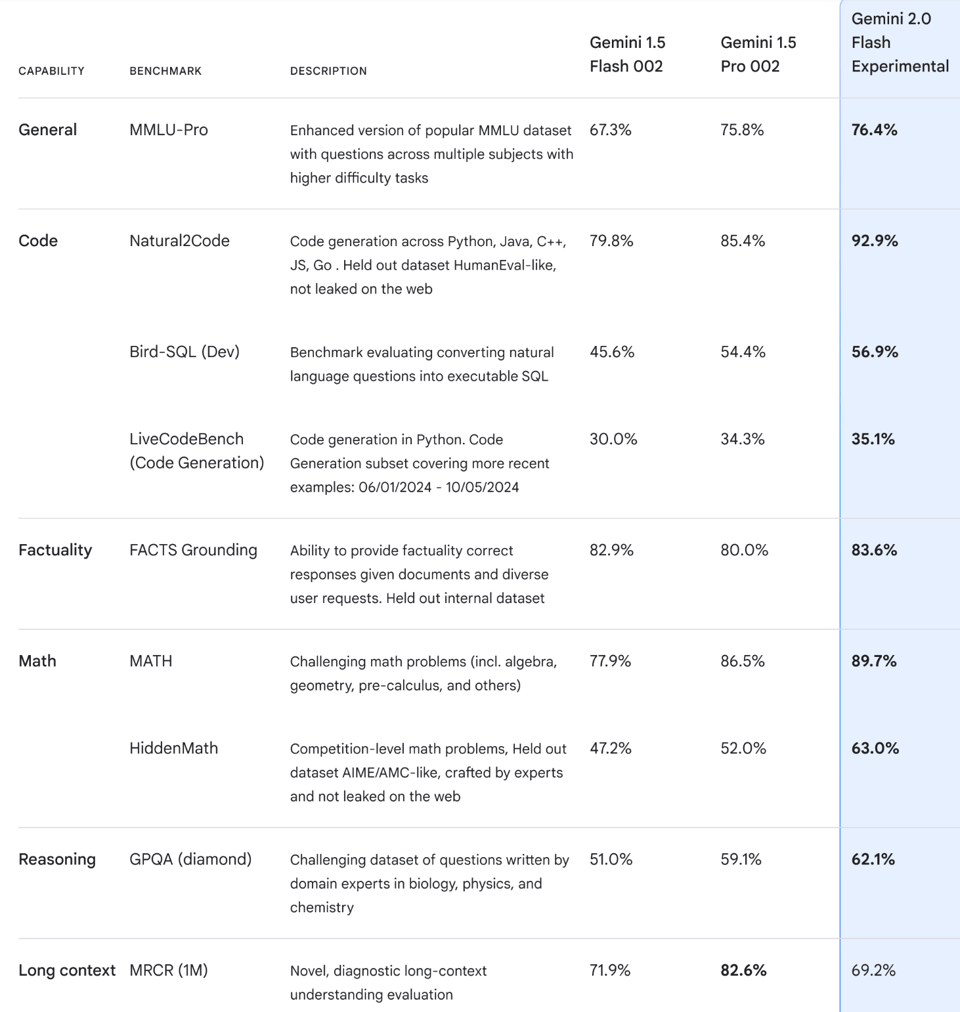

Google unveiled Gemini 2.0 Flash, highlighting its superior multimodal capabilities and enhanced coding performance compared to previous iterations. Community members have praised its robust coding functionalities. Some concerns were raised about its stability and effectiveness relative to other models, as discussed in multiple tweets.

Performance Boost with Named Results

Named results in Mojo allow direct writes to an address, avoiding costly move operations and providing performance guarantees during function returns. This feature enhances efficiency in scenarios where move operations are not feasible.

Maya, an open source, multilingual vision-language model supporting 8 languages, has been introduced under the Apache 2.0 license. Maya's paper highlights its instruction-finetuned capabilities, and the community is eagerly awaiting a blog post and additional resources.

A member asked about plans for a Rerank 3.5 English model; no response has been given so far. Aya Expanse potentially benefits from the Command family's performance, offering enhanced instruction-following capabilities. Users have reported persistent API 403 errors when using the API request builder. Members are seeking high-quality datasets suitable for quantification and are specifically interested in the 're-annotations' tag from the aya_dataset.

The section also covers advancements in function calling for AI models, which leverage detailed function descriptions and signatures to improve generalization.

OpenAI Discussion Forums

This section provides insights from various channels in the OpenAI Discord server. It discusses integrations of AI models with Apple devices, performance issues with ChatGPT, the comparison of different AI tools like Gemini 2.0 Flash and Llama, and strategies for maximizing subscription usage. It also covers topics such as fine-tuning issues with Custom GPTs, API error handling, and platform outages in the AI discourse channels. Additionally, it delves into the Eleuther channel with discussions on neural network training dynamics, Muon optimizer performance, biases in large language models, regularization techniques, and gradient orthogonalization as a regularizer.

Training and Model Development Updates

This section provides insights into the latest advancements and discussions related to training neural networks and developing AI models. From optimizing traditional RNNs to addressing limitations in Transformer architectures, various links are mentioned that touch upon improving model performance, memory-intensive training of large language models, and stabilizing deep Transformers. Additionally, the section delves into specific discussions from different AI-related channels such as evaluating model perplexity, addressing slow inference processing, enhancing batch processing, and exploring token processing functions. These conversations shed light on challenges and solutions in fine-tuning models, managing memory during training, and utilizing different tools and datasets for AI development.

Stability.ai and Stable Diffusion

This section discusses various topics related to AI in the Stability.ai Discord channel. Users talk about VRAM usage and management, AI model recommendations for image enhancement, scalping GPUs, using AI for classification and tagging images, and voice training AI programs. The discussions cover how caching prompts in JSON format affect memory usage, using TTRPG rule books for custom games, dealing with scalpers purchasing GPUs, tools for image classification, and training AI for specific voices. These conversations showcase the diverse interests and applications of AI technology within the Discord community.

Choosing Discord Names and Open Hangouts

- A member shared a personal touch to their Discord profile name chosen by their daughter, sparking laughter and bonding in the community.

- An announcement was made for open hangouts scheduled on Thursday and Friday with a possible early start for paid participants.

- A member humorously expressed discomfort with the Whova app, leading to a discussion on app preferences within the group.

LLM Agents (Berkeley MOOC) MOOC Questions

Hackathon Submission Guidelines

It's confirmed that the hackathon submissions are due by December 17th, while the written article is due by December 12th, 11:59 PM PST. Students can submit one article for the hackathon, distinct from the 12 lecture summaries previously required.

Details on Written Article Assignment

Students must create a post of roughly 500 words on platforms like Twitter, Threads, or LinkedIn, linking to the MOOC website. The article submission is graded as pass or no pass, requiring the same email used for course sign-up to ensure credit.

API Key Usage Isn’t Mandatory

Participants should use their personal API keys for lab assignments but must not submit those keys. It was clarified that using your own API key is acceptable for coursework, even if you did not receive the OpenAI API key.

Anonymous Feedback Submission Available

An anonymous feedback form was created for course feedback and hackathon suggestions. Participants are encouraged to share their thoughts on the course via the feedback form.

Social Media Submission Allowed

Students can create and submit links to their articles posted on social media platforms, including a final draft submission.

LLM Agents (Berkeley MOOC) ▷ #mooc-lecture-discussion (2 messages)

The discussion in this section covers Function Calling in AI, ToolBench Platform, and Key Research Papers. It explores the detailed understanding of function descriptions and signatures in AI models, the emergence of the ToolBench project for training large language models, and the sharing of important research papers such as paper 1 and paper 2 for ongoing dialogue about their relevance in current topics.
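The idea of giving a model detailed function descriptions and signatures can be sketched roughly as follows. This is only an illustration under stated assumptions: `describe_function`, `get_weather`, and `dispatch` are hypothetical names invented for this example, the JSON-style layout is one common convention, and nothing here is taken from ToolBench or the course materials.

```python
import inspect
from typing import Any, Callable, get_type_hints

# Illustrative mapping from Python annotations to JSON-Schema type names.
# It is deliberately incomplete; unknown types fall back to "string".
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_function(fn: Callable[..., Any]) -> dict:
    """Build a JSON-style description of fn from its signature and docstring."""
    hints = get_type_hints(fn)
    properties = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        # Parameters without a default value must be supplied by the caller.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def get_weather(city: str, days: int = 1) -> str:
    """Return a short forecast for a city over the given number of days."""
    # Hypothetical tool; a real one would query a weather service.
    return f"{days}-day forecast for {city}"


def dispatch(call: dict, registry: dict) -> Any:
    """Run a model-produced call shaped like {"name": ..., "arguments": {...}}."""
    return registry[call["name"]](**call["arguments"])


if __name__ == "__main__":
    print(describe_function(get_weather))
    call = {"name": "get_weather", "arguments": {"city": "Paris", "days": 2}}
    print(dispatch(call, {"get_weather": get_weather}))
```

The description produced by `describe_function` is what would be shown to the model, while `dispatch` stands in for the step that executes whatever call the model returns. Deriving the description from the signature and docstring keeps the two from drifting apart, which is one plausible reason detailed signatures help generalization.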

Continuing Content

This section seems to be a continuation of the previous content, possibly discussing another aspect or detail. Although the specific details are not provided in this part, it serves as a bridge to the following information.

FAQ

Q: What are some recent highlights in the field of AI discussed in this issue?

A: Recent highlights in the field of AI include the launch of Google's Gemini 2.0 Flash model, integration of ChatGPT with Apple, performance of Claude 3.5 Sonnet, Google's advancements, discussions from the NeurIPS conference, and more.

Q: What is the significance of Google's Gemini 2.0 Flash model?

A: Google's Gemini 2.0 Flash model is praised for its superior multimodal capabilities and enhanced coding performance. Some concerns were raised about its stability and effectiveness compared to other models.

Q: What are some key features of the Windsurf Wave 1 update?

A: The Windsurf Wave 1 update integrates tools like Cascade Memories and automated terminal commands, improves image input capabilities, and supports environments like WSL and devcontainers. Users are particularly excited about the integration of Cascade Memories.

Q: What does the named results feature in Mojo provide?

A: Named results in Mojo allow direct writes to an address, avoiding costly move operations and providing performance guarantees during function returns. This enhances efficiency in scenarios where move operations are not feasible.

Q: What open source multilingual vision-language model has been introduced and under what license?

A: Maya, an open source, multilingual vision-language model supporting 8 languages, has been introduced under the Apache 2.0 license. Maya is instruction-finetuned, and the community is awaiting a blog post and additional resources.
