[AINews] LMSys killed Model Versioning (gpt 4o 1120, gemini exp 1121) • Buttondown

Chapters

AI Twitter and Reddit Recaps

Advanced Models and Capabilities

Hardware Solutions and Performance Optimizations Drive AI Efficiency

Scaling Laws Predict Model Performance

Discussions on ML-Related Questions

AI Discussions on Censorship

Exploration of API-Related Discussions

OpenRouter Update

Perplexity AI ▷ pplx-api

Cohere Discussions

MI300X Struggles and Issues in OpenAccess AI Collective

AI Twitter and Reddit Recaps

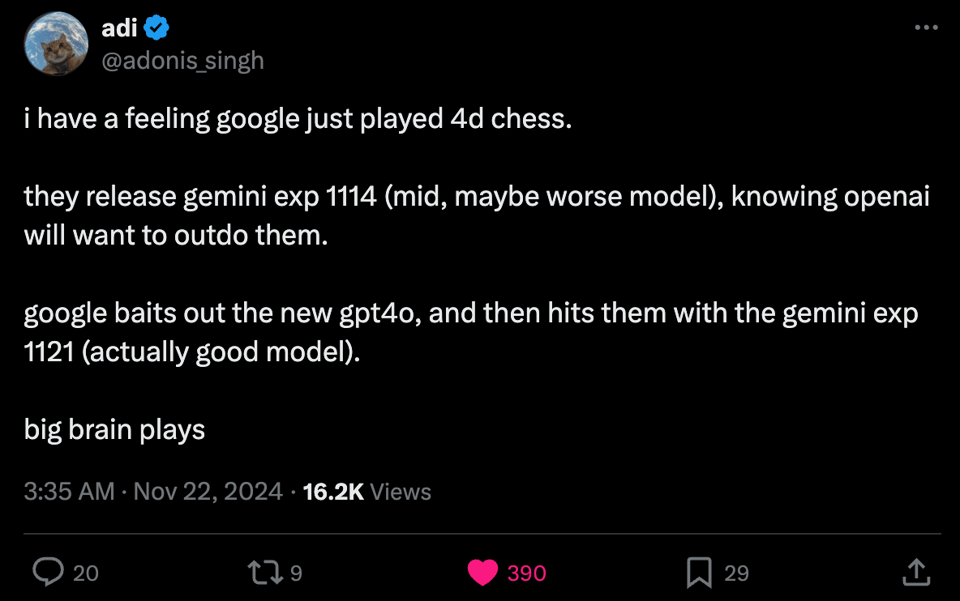

The AI Twitter recap covers several themes: DeepSeek's R1 performance compared with OpenAI's models, enhancements in Google's Gemini-Exp-1121 model, MistralAI's expansion, Claude Pro's Google Docs integration, the unveiling of the SmolTalk dataset, and voice capabilities for LangGraph agents. It also highlights Menlo Ventures' report on the evolution of generative AI, along with memes and humor about the challenges of AI development. The AI Reddit recap for /r/LocalLlama covers an M4 Max with 128GB running the Qwen 72B Q4 MLX model efficiently, including its power consumption, thermal performance, memory bandwidth, and model testing results.
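For a rough sense of why memory bandwidth matters for the M4 Max result, here is a back-of-envelope sketch. It assumes a dense model whose weights are all read once per generated token, about 4.5 effective bits per weight for a 4-bit quantized model (4 bits plus per-group scale and bias overhead), and 546 GB/s of memory bandwidth. These inputs are assumptions rather than figures from the recap, and the result is a theoretical ceiling, not a measurement.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    # Parameters (in billions) * bits per weight / 8 bits per byte gives GB directly.
    return params_billion * bits_per_weight / 8


def max_decode_tokens_per_s(model_gb: float, bandwidth_gb_s: float) -> float:
    """Bandwidth-bound ceiling: each generated token streams every weight once."""
    return bandwidth_gb_s / model_gb


# Assumed inputs: 72B parameters, ~4.5 effective bits/weight, 546 GB/s bandwidth.
size = weights_gb(72, 4.5)
ceiling = max_decode_tokens_per_s(size, 546)
print(f"weights ~{size:.1f} GB, decode ceiling ~{ceiling:.1f} tokens/s")
```

Real throughput lands below this ceiling because of KV-cache reads, compute overhead, and imperfect bandwidth utilization; the KV cache also needs memory on top of the weights.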

Advanced Models and Capabilities

This section covers advances in AI models and their capabilities. Tülu 3 launched with performance reported to exceed Llama 3.1, using a new Reinforcement Learning with Verifiable Rewards (RLVR) approach. Gemini Experimental 1121 performs strongly on Chatbot Arena, with significant improvements in coding and reasoning. Open-source models such as Qwen 2.5 32B reach GPT-4o-level performance in code editing, though the results show that quantization choices noticeably affect performance.
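The idea behind verifiable rewards can be sketched in a few lines: instead of scoring outputs with a learned reward model, the reward is 1 only when the answer can be checked as correct against a known ground truth (for example, a math problem's final answer) and 0 otherwise. The answer-extraction rule below (take the last number in the completion) is a hypothetical simplification, not Tülu 3's actual implementation.

```python
import re
from fractions import Fraction
from typing import Optional

# Matches integers, decimals, and simple fractions such as "-3", "0.75", "3/4".
NUMBER = re.compile(r"-?\d+(?:\.\d+)?(?:/\d+)?")


def extract_final_answer(completion: str) -> Optional[str]:
    """Hypothetical rule: treat the last number in the completion as the answer."""
    matches = NUMBER.findall(completion)
    return matches[-1] if matches else None


def verifiable_reward(completion: str, ground_truth: str) -> float:
    """Binary reward: 1.0 if the extracted answer equals the ground truth, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    try:
        # Compare as exact rationals so "0.75" and "3/4" count as the same answer.
        return 1.0 if Fraction(answer) == Fraction(ground_truth) else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0


print(verifiable_reward("12 * 3 = 36, so the answer is 36.", "36"))  # → 1.0
print(verifiable_reward("The answer is 0.75", "3/4"))  # → 1.0
print(verifiable_reward("I am not sure.", "36"))  # → 0.0
```

Because the reward is binary and checkable, it avoids the reward-model errors that a policy could otherwise learn to exploit; the trade-off is that it only applies to tasks with verifiable answers.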

Hardware Solutions and Performance Optimizations Drive AI Efficiency

This section covers hardware choices and performance optimizations that drive AI efficiency, including moving to rented cloud GPUs to speed up model runs, YOLO's strong results in video object detection, and critical hangs on MI300X GPUs. It also covers comprehensive model evaluations and benchmark comparisons that track AI progress.

Scaling Laws Predict Model Performance

A paper introduces an approach that uses publicly available models to build scaling laws that predict language model performance from scale. The method highlights variations in training efficiency and proposes that model performance depends on a low-dimensional capability space. Fitting scaling laws is far cheaper than training the full target model: Meta reportedly spends only 0.1% to 1% of the target model's budget on such predictions.
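Scaling laws are usually expressed as power laws, and the simplest way to fit one is a linear regression in log-log space, sketched below on synthetic data. This is the standard single-variable fit, not the paper's method, which instead builds on publicly available models and a low-dimensional capability space; all numbers here are made up for illustration.

```python
import math


def fit_power_law(compute, loss):
    """Fit loss ≈ a * compute**(-b) by least squares on (log compute, log loss)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)


def predict_loss(a, b, compute):
    return a * compute ** (-b)


# Synthetic "small runs" generated from loss = 10 * C**-0.05 (illustrative only).
small_compute = [1e18, 1e19, 1e20, 1e21]
small_loss = [10 * c ** -0.05 for c in small_compute]
a, b = fit_power_law(small_compute, small_loss)
# Extrapolate two orders of magnitude beyond the largest small run.
print(a, b, predict_loss(a, b, 1e23))
```

In this toy setup the four small runs together use about 1.1e21 units of compute, roughly 1.1% of the 1e23 target, which is why fitting on small runs is so much cheaper than training the target model to find out.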

Discussions on ML-Related Questions

Debate on Post-training Capabilities:

- A member asked whether post-training merely surfaces capabilities that already exist in the base model or induces entirely new ones, noting that the discussion needs a clearer distinction between the two.

- Another member argued it could be both, since post-training often surfaces latent abilities within the model, which prompted further debate.

The Role of SFT in Learning:

- Members discussed how Supervised Fine-Tuning (SFT) might both surface base model abilities and introduce new ones, depending on the quantity and quality of data used.

AI Discussions on Censorship

Users debated whether AI censorship is necessary to prevent harmful uses. One member pointed to OpenAI's use of moderation to avoid legal issues. Participants raised various concerns, stressing the need to balance freedom of speech against preventing harmful consequences.

Exploration of API-Related Discussions

This section covers discussions about API usage and efficiency improvements across several Discord channels. Users shared experiences and asked for help with topics such as product categorization with GPT-4, token reduction strategies, and prompt caching. The focus was on optimizing token usage, exploring new model features, and resolving model configuration problems. There were also exchanges on the nuances of API integrations and on how the choice of provider affects model performance. Members also pointed each other to tools such as uithub for working with GitHub repositories.
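Two of the token-saving ideas mentioned here can be combined in a short sketch: sending shared instructions once for a batch of products instead of once per product, and keeping the unchanging instructions at the start of the prompt, since provider prompt caching generally works by reusing an identical prompt prefix. The category list, prompt wording, and the 4-characters-per-token estimate are assumptions; exact counts require the model's own tokenizer.

```python
INSTRUCTIONS = (
    "You are a product classifier. Assign each product exactly one category "
    "from: Electronics, Clothing, Home, Toys, Other. "
    "Reply with one line per product in the form '<id>: <category>'."
)


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def per_item_prompts(products):
    # One request per product: the instructions are repeated every time.
    return [f"{INSTRUCTIONS}\n\n1: {p}" for p in products]


def batched_prompt(products):
    # One request for all products. Static instructions come first and the
    # variable list last, so the prompt prefix stays identical across batches,
    # which is what prefix-based prompt caching can reuse.
    lines = [f"{i}: {p}" for i, p in enumerate(products, start=1)]
    return INSTRUCTIONS + "\n\n" + "\n".join(lines)


products = ["USB-C charging cable", "Wool winter scarf", "Ceramic coffee mug", "Wooden puzzle set"]
per_item_total = sum(estimate_tokens(p) for p in per_item_prompts(products))
batched_total = estimate_tokens(batched_prompt(products))
print(f"per-item: ~{per_item_total} tokens, batched: ~{batched_total} tokens")
```

Batching trades a smaller input for a longer single response, so very large batches can hit output limits or make per-item errors harder to isolate.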

OpenRouter Update

Users were confused about which providers on OpenRouter support long-context prompts. The platform now routes requests automatically to providers that meet these requirements, filtering out those with insufficient context length or output limits. Mistral Medium has been deprecated, so users are switching to Mistral-Large, Mistral-Small, or Mistral-Tiny. The OpenRouter API documentation has been updated for clarity, especially regarding context window capabilities. A new model, Gemini Experimental 1121, has been added, with improvements in coding, reasoning, and vision. Discussions also covered file upload capabilities, community building around OpenRouter, and the motivations behind its creation. Finally, users raised concerns about usage limits in the main Claude app and the need for custom provider keys to work around them.
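Routing by context requirements amounts to a filter over provider limits. The sketch below is a hypothetical illustration with made-up provider names and limits, not OpenRouter's actual routing logic: a provider qualifies only if its context window fits the prompt plus the requested output and its output cap covers the requested completion length.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Provider:
    name: str
    context_length: int     # max prompt + completion tokens accepted
    max_output_tokens: int  # max completion tokens per request


def eligible_providers(
    providers: List[Provider], prompt_tokens: int, requested_output_tokens: int
) -> List[Provider]:
    """Keep providers whose limits can serve this request."""
    return [
        p
        for p in providers
        if p.context_length >= prompt_tokens + requested_output_tokens
        and p.max_output_tokens >= requested_output_tokens
    ]


# Hypothetical providers for the same model.
providers = [
    Provider("provider-a", 32_000, 4_096),
    Provider("provider-b", 128_000, 4_096),
    Provider("provider-c", 128_000, 16_384),
]
print([p.name for p in eligible_providers(providers, 100_000, 8_000)])
# → ['provider-c']
```

Here provider-a fails on context length and provider-b has enough context but too small an output cap, which is exactly the kind of mismatch that made manual provider selection confusing.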

Perplexity AI ▷ pplx-api

This section covers Perplexity AI API topics, including rate limits, secure use of API keys, and session management in frontend apps. Members reported hitting rate limits and asked for clarification on how the API behaves. Others discussed using their own Perplexity API keys on alternative platforms while complying with security standards. Session management was also explained in simpler terms by comparing it to using cookies for authentication.
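A common client-side response to rate limits is to retry HTTP 429 responses with exponential backoff and random jitter. The sketch below is generic and does not use any Perplexity-specific API: `request` stands for a hypothetical function that performs the call and returns a status code and body, and `sleep` is injectable so the retry schedule can be tested without waiting.

```python
import random
import time
from typing import Callable, List, Tuple

Response = Tuple[int, str]


def call_with_backoff(
    request: Callable[[], Response],
    max_retries: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call `request`, retrying on HTTP 429 with exponential backoff plus jitter."""
    if max_retries < 0:
        raise ValueError("max_retries must be non-negative")
    for attempt in range(max_retries + 1):
        status, body = request()
        if status != 429 or attempt == max_retries:
            return status, body  # success, non-retryable error, or out of retries
        # Wait base_delay * 2**attempt seconds plus up to base_delay of random jitter.
        sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise RuntimeError("unreachable")


# Example with a fake request that is rate-limited twice, then succeeds.
responses = iter([(429, "rate limited"), (429, "rate limited"), (200, "ok")])
delays: List[float] = []
status, body = call_with_backoff(lambda: next(responses), sleep=delays.append)
print(status, body, len(delays))  # → 200 ok 2
```

The jitter spreads out retries from many clients so they do not all hit the API again at the same moment.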

Cohere Discussions

Members of the Cohere Discord server discussed a range of topics: participating in the 30 Days of Python challenge, preferring the Go programming language for a capstone project, exploring the Cohere GitHub repository to contribute and give feedback, using the Cohere Toolkit for RAG applications, and finding starter code in Cohere's notebooks. There was also discussion of the upcoming launch of multimodal embeddings, research agents built with Cohere's technology, and ways to address rate limits when producing articles efficiently.
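The retrieval step of a RAG application can be sketched without any SDK: rank documents by cosine similarity between a query embedding and document embeddings, then place the top matches into the prompt. The three-dimensional vectors below are hand-made stand-ins for real embeddings, and none of this is the Cohere Toolkit's API; in practice the embeddings would come from an embedding model.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve(query_vec, docs, k=2):
    """docs: list of (text, embedding). Return the k texts most similar to the query."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    # Number the passages so the model can cite which source it used.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return f"Answer using only the sources below.\n\n{context}\n\nQuestion: {question}"


# Toy documents with hand-made embeddings (hypothetical).
docs = [
    ("Multimodal embeddings accept images as well as text.", [0.9, 0.1, 0.0]),
    ("Python challenge day 1: variables and types.", [0.0, 0.2, 0.9]),
    ("Embeddings map text to vectors for similarity search.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]
print(build_prompt("What can embeddings encode?", retrieve(query_vec, docs)))
```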

MI300X Struggles and Issues in OpenAccess AI Collective

A member reported intermittent GPU hangs during longer runs on a standard ablation set with axolotl. The hangs appear only past the 6-hour mark and not in shorter runs, raising concerns about the stability of long training sessions on MI300X. This led to discussion about tracking the problem on GitHub, where the GPU hang HW Exception issue has since been recorded along with technical context such as loss and learning rate.
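One way to catch intermittent hangs like these in long runs is a step watchdog that records when the last training step finished and reports when too much time passes without progress, keeping the latest loss and learning rate for a bug report. This is a hypothetical sketch, not an axolotl or ROCm feature; the timeout and logged fields are assumptions, and the clock is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, Optional


class StepWatchdog:
    """Flags a possible hang when no training step has completed within `timeout_s`."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_step_time = clock()
        self.last_context: Optional[dict] = None

    def step_completed(self, step: int, loss: float, lr: float) -> None:
        # Call from the training loop after each optimizer step.
        self.last_step_time = self.clock()
        self.last_context = {"step": step, "loss": loss, "lr": lr}

    def check(self) -> Optional[str]:
        # Call periodically from a separate monitoring thread or process;
        # returns a report string if progress has stalled, else None.
        stalled_for = self.clock() - self.last_step_time
        if stalled_for > self.timeout_s:
            return f"no progress for {stalled_for:.0f}s; last context: {self.last_context}"
        return None


now = [0.0]  # fake clock for the example
wd = StepWatchdog(timeout_s=600, clock=lambda: now[0])
wd.step_completed(step=1200, loss=1.23, lr=2e-5)
now[0] = 300.0
print(wd.check())  # → None
now[0] = 1000.0
print(wd.check())
```

The check has to run outside the training thread, because a hung GPU call blocks the loop that would otherwise report it.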

FAQ

Q: What are the key advancements in AI models discussed in the essay?

A: The essay discusses the launch of Tülu 3, which reportedly outperforms Llama 3.1; Gemini Experimental 1121's strong Chatbot Arena results; and open-source models such as Qwen 2.5 32B reaching GPT-4o-level performance in code editing.

Q: What hardware solutions and performance optimizations does the essay highlight for driving AI efficiency?

A: The essay covers moving to rented cloud GPUs, YOLO's strong results in video object detection, and critical hangs on MI300X GPUs.

Q: What approach for predicting language model performance does the paper discussed in the essay introduce?

A: The paper uses publicly available models to build scaling laws that predict language model performance from scale. It highlights variations in training efficiency and proposes that model performance depends on a low-dimensional capability space.

Q: What debates does the essay highlight regarding AI capabilities and learning?

A: The discussions center on post-training: whether it surfaces existing capabilities or induces entirely new ones. Members also debated the role of Supervised Fine-Tuning (SFT), which may both surface base model abilities and introduce new ones.

Q: What topics related to API usage and efficiency improvements across Discord channels does the essay cover?

A: Topics include product categorization with GPT-4, token reduction strategies, prompt caching for efficiency, and how the choice of provider affects model performance.

Q: What issues related to provider selection on OpenRouter does the essay discuss?

A: The essay discusses confusion over which providers support long-context prompts on OpenRouter, the platform's automatic routing to providers that meet context requirements, the deprecation of Mistral Medium, and the introduction of Gemini Experimental 1121.

Q: What are the key Perplexity AI topics covered in the essay?

A: Topics include API rate limits, secure use of API keys, and session management in frontend apps, explained through a comparison with cookie-based authentication.

Q: What discussions about Cohere's Discord server does the essay highlight?

A: Discussions include participation in a Python programming challenge, programming language preferences for a capstone project, contributions to Cohere's GitHub repository, use of the Cohere Toolkit, the upcoming launch of multimodal embeddings, research agents built with Cohere's technology, and ways to address rate limits.

Q: What technical issues with GPU hangs during longer MI300X runs does the essay report?

A: The essay reports intermittent GPU hangs past the 6-hour mark during runs on a standard ablation set with MI300X. This prompted discussion about tracking the problem on GitHub, where the GPU hang HW Exception issue was recorded along with context such as loss and learning rate.
