[AINews] Meta Apollo - Video Understanding up to 1 hour, SOTA Open Weights

Chapters

AI Twitter and Reddit Recaps

Hugging Face Synthetic Data Generator and High Performance Benchmarks

OpenRouter (Alex Atallah) Discord

Discord Discussions on Windsurf Issues and Tools Comparison

Notebook LM Discord Use-Cases

AI Regulation and Industry Impact Discussions

Implementation on GPU, Physics Drives Most AI Techniques, Trellis Coding, Trade-offs in Model Compression, Redundant Information in MLPs

StabilityAI: Discussion Forums Highlights

Stable Diffusion and AI Applications

Interconnects (Nathan Lambert) Messages

LlamaIndex Blog

Discussion Highlights

AI Twitter and Reddit Recaps

The AI Twitter and Reddit Recaps section surveys recent discussions in the AI community. The Twitter recap covers AI model releases such as Google DeepMind's Veo 2, OpenAI's ChatGPT, and Meta's Apollo; research developments like Llama 70B performance and expanded language support; industry news like Figure AI progress and Klarna's AI integration; insights on LLM self-recognition and video vs text processing; and humorous takes on AI-related topics. The Reddit recap covers discussions on Meta's Apollo Multimodal Models, local execution, VRAM efficiency, and critiques of Chain of Thought prompts.

Hugging Face Synthetic Data Generator and High Performance Benchmarks

The section discusses the launch of the Synthetic Data Generator by Hugging Face, a new tool for creating datasets, released under an Apache 2.0 license. It supports tasks like Text Classification and Chat Data for Supervised Fine-Tuning, and offers features such as local hosting and compatibility with OpenAI APIs. Datasets can be pushed to the Hugging Face Hub or Argilla, and the tool showcases successful results with improved data diversity techniques. The section also covers high-performance benchmarks comparing the Intel B580 and A770 on Windows. The B580 outperforms the A770, which sparked discussion of the unexpected results and the impact of driver updates on system performance and configurations. Users also expressed concerns over market scalping. Finally, the section touches on workflow applications like n8n, Omnichain, and Wilmer for managing multi-step reasoning processes.

OpenRouter (Alex Atallah) Discord

The discussion in the OpenRouter (Alex Atallah) Discord channel covered various topics including the integration of SF Compute as a new provider, a significant price reduction for Qwen QwQ, and the launch of new Grok models by xAI. Additionally, a new API wrapper for OpenRouter was introduced, and the performance of Hermes 3 405B model was discussed. These conversations highlighted the developments and challenges within the OpenRouter ecosystem.

Discord Discussions on Windsurf Issues and Tools Comparison

- Users in the #discussion channel of Codeium / Windsurf reported various issues with Windsurf, such as freezing and malfunctioning actions and chat windows. Recommendations included reinstalling or refreshing settings.

- Concerns were raised about the increasing dependency on AI tools like Claude, with users discussing the risks of relying solely on AI for coding tasks and the importance of maintaining personal coding skills.

- A comparison was made between Codeium and Gemini 2.0, noting that while Gemini may offer better coding performance, it lacks some features present in Claude. Benchmarks were shared to compare the tools' performance based on specific use cases.

- The discussion in the #windsurf channel explored topics like the Model Context Protocol (MCP) for standardized function calls, using tools like Playwright and MCP for GUI testing, and Ruff Linter for Python code formatting. Users exchanged tips on excluding certain files and maintaining clean code standards.

Notebook LM Discord Use-Cases

Notebook LM Discord Use-Cases (96 messages🔥🔥):

- Notebook LM Podcast Features Explored: The latest features of Notebook LM have been discussed, including customizations and interactive functionalities that enhance the user experience. Members shared links to podcasts showcasing these features, asserting that the application is changing the landscape of audio content.

- Customizing AI Outputs for Unique Styles: Users highlighted the importance of good prompting and custom functions to tailor AI outputs, which can result in varied tones and styles. A shared YouTube video provided tips on effective prompting techniques for artistic results.

- Bilingual and Multilingual Uses of AI Tools: Questions arose regarding how to utilize Notebook LM in different languages, with suggestions on instructing the AI to respond in specific languages. Users shared methods to prompt the AI for multilingual outputs, emphasizing the necessity of proper configuration.

- Creating Engaging Content with AI: Conversations emerged around generating captivating audio narratives and content using AI, which seemed to resonate well with listeners. A variety of content styles, including those mimicking famous figures and ASMR tones, were experimented with to enhance audience engagement.

- Exploring AI's Capability in Passing the Turing Test: Members discussed the challenges AIs face in passing the Turing Test and the importance of conversational tone adaptation. Experiments were shared, showcasing how different character moods can influence AI's conversational style and its perceived intelligence.

AI Regulation and Industry Impact Discussions

Members engaged in discussions regarding AI regulation and its impact on industries. There was skepticism about the government's ability to regulate AI effectively, with comparisons to past efforts with social media. The visible gains from AI in various industries were acknowledged, highlighting AI as a tool that enhances productivity. Suggestions were made to establish a nuclear treaty analogy for AI governance to ensure safety and accountability. Concerns were raised about the long-term societal implications of AI advancements and whether humanity will be able to manage future smart systems. Additionally, a link was provided for further reading on merging models in AI systems.

Implementation on GPU, Physics Drives Most AI Techniques, Trellis Coding, Trade-offs in Model Compression, Redundant Information in MLPs

The paper covers implementing decompression efficiently on a GPU; the approach was described as simple, even though the paper itself is hard to read. The discussion highlights that many AI techniques originate in physics, and stresses the relevance of communication theory concepts, especially for LLMs. A historical account of the inventor of trellis coding illustrates how slowly, but with what impact, technology ideas can progress. Strategies for maintaining model integrity while updating LoRAs reveal trade-offs between training time and memory efficiency, and a periodic reinitialization approach was suggested. A discussion on redundant information in MLPs notes that position invariance remains largely unexplored, hinting at opportunities for simplification and potential implications for model compression strategies.
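The summary above does not say how the suggested periodic reinitialization works, so the following is only a minimal sketch under stated assumptions: a LoRA-style layer with a frozen weight `W` and a low-rank update `B @ A`, where the update is periodically folded into `W` and the adapter is reset. The class name `LoRALinear`, the method `merge_and_reinit`, and the initialization scale are all hypothetical and chosen for illustration. It uses pure Python lists to stay self-contained.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


class LoRALinear:
    """Hypothetical layer: base weight W (out x in) plus low-rank update B @ A."""

    def __init__(self, weight, rank, seed=0):
        self.rng = random.Random(seed)
        self.weight = [row[:] for row in weight]
        self.rank = rank
        self.out_dim = len(weight)
        self.in_dim = len(weight[0])
        self.reset_adapter()

    def reset_adapter(self):
        # Assumed init: A small random, B zero, so B @ A starts at zero.
        self.A = [[self.rng.gauss(0.0, 0.01) for _ in range(self.in_dim)]
                  for _ in range(self.rank)]
        self.B = [[0.0] * self.rank for _ in range(self.out_dim)]

    def effective_weight(self):
        # The weight the layer actually applies: W + B @ A.
        return add(self.weight, matmul(self.B, self.A))

    def merge_and_reinit(self):
        # Fold the learned update into W, then start a fresh adapter.
        self.weight = self.effective_weight()
        self.reset_adapter()


layer = LoRALinear([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], rank=1)
layer.B = [[0.5], [-0.25]]  # stand-in for values learned during training
before = layer.effective_weight()
layer.merge_and_reinit()
after = layer.effective_weight()
```

Because `B` is reset to zero, the effective weight is unchanged by the merge, which is what lets training continue from the same function with a fresh low-rank subspace. The trade-off mentioned above shows up in how often the merge runs: only the small `A` and `B` matrices need gradients between merges, but each merge touches the full weight matrix.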

StabilityAI: Discussion Forums Highlights

- Issues with updates not reflecting on the front end were raised, suggesting the problem is not just a browser concern. Users discussed strategies for prompting Bolt effectively, shared community support tips, and offered troubleshooting suggestions. The community is eagerly anticipating new features and integration updates on Bolt.new/Stackblitz.

- Members in the Latent Space chat reviewed Grok-2 enhancements, Ilya Sutskever's NeurIPS talk insights, Google's Veo 2 and Imagen 3 unveiling, Byte Latent Transformer, and Search in Voice mode.

- In Latent Space's AI-in-action-club, discussions centered on NeurIPS Webcrawl implications, prompt engineering, AI functions by Marvin, the SillyTavern tool for LLM testing, and insights on Entropix.

- LM Studio chat covered multimodal models, limitations on fine-tuning, uncensored chatbot options, RAG implementation, and upcoming software updates.

- In the LM Studio hardware chat, topics included PSU ratings, Radeon VII GPU issues, GPU selection for AI tasks, model usage optimization, and comparing GPU upgrades and costs.

Stable Diffusion and AI Applications

The section discusses various topics related to Stable Diffusion and AI applications, including Face Swapping with Reactor Extension for image manipulation, recommendations for Stable Diffusion models like Flux and Pixelwave, learning resources for Stable Diffusion, upscaling generated images, and engagement in other topics like US stocks. The section also mentions links to related resources and tools for Stable Diffusion models.

Interconnects (Nathan Lambert) Messages

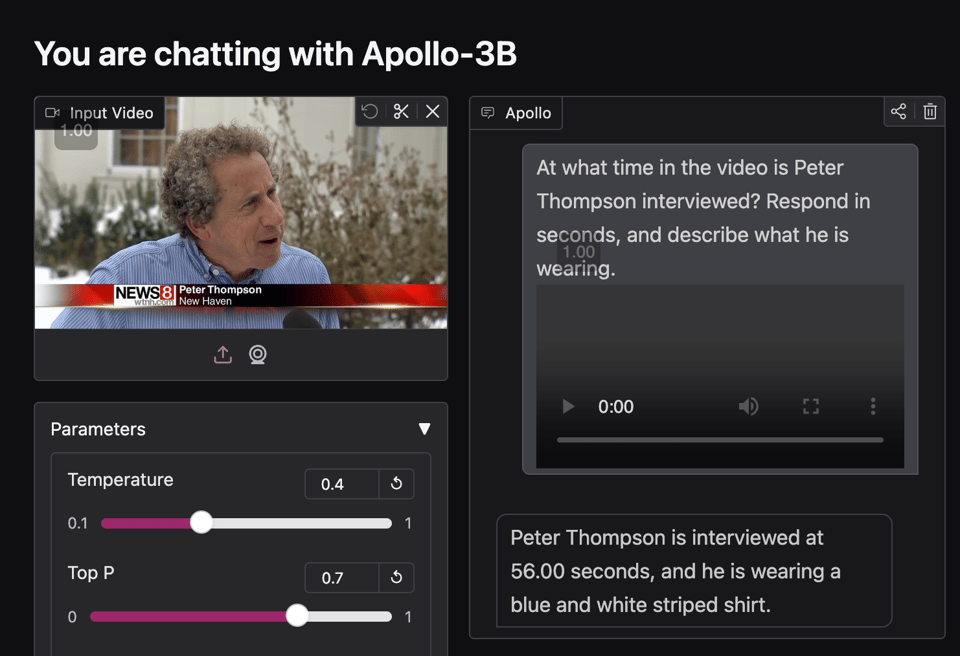

Apollo Video LLMs challenge competitors:

- The Apollo series of video LLMs from Meta shows strong performance, comparable to llava-OV and Qwen2-VL. As a criticism, it was noted that the authors emphasized their own performance metrics without highlighting the best result in each section, which complicates the comparison.

Surprising LLM choice for Apollo:

- Interestingly, Apollo uses Qwen2.5 as its underlying LLM instead of the more expected Llama. This decision raises questions about the selection of models for optimal performance.

Performance chart provides clarity:

- A chart detailing the state-of-the-art (SOTA) performance in each section was shared, highlighting the best across all models. The strongest performance is underlined while key metrics are shown in bold for easy reference.

Apollo aims to improve video understanding:

- The research includes a systematic exploration of the design space for video-LMMs, uncovering critical factors that drive performance. Insights gained aim to be actionable for the community pursuing advancements in video understanding.

LlamaIndex Blog

The LlamaIndex Blog section covers various tutorials and workflows related to LlamaIndex functionalities. It includes tutorials on mastering LlamaIndex in five lines of code, ensuring contract compliance effortlessly, and streamlining patient case summaries using agentic workflows. Each tutorial emphasizes different aspects of LlamaIndex functionality and provides detailed insights into how to leverage its capabilities for specific tasks.

Discussion Highlights

This section includes various discussions and inquiries from different AI-related channels on Discord. Topics include folder creation issues, API response problems, billing tracking for LiteLLM, apps for learning Japanese, using OS locally, optimizing a Claude Sonnet prompt with DSPy, caution about outdated examples, the APOLLO optimizer and its memory efficiency, challenges in LLM training, multi-turn KTO, an explanation of progressive tokenization, analysis of wavelet coefficients, Byte Latent Transformer patches outperforming tokens, large concept models in NLP, Mozilla AI's final December event on a RAG application, and an update on Gorilla LLM's Berkeley Function Calling leaderboard.

FAQ

Q: What is the importance of the Synthetic Data Generator by Hugging Face?

A: The Synthetic Data Generator by Hugging Face is a new tool for creating datasets under an Apache 2.0 license. It supports tasks like Text Classification and Chat Data for Supervised Fine-Tuning, offering features such as local hosting and compatibility with OpenAI APIs.

Q: What were the discussions in the OpenRouter Discord channel?

A: Conversations in the OpenRouter Discord channel covered topics such as the integration of SF Compute as a new provider, a significant price reduction for Qwen QwQ, the launch of new Grok models by xAI, introducing a new API wrapper for OpenRouter, and discussing the performance of the Hermes 3 405B model.

Q: What are some concerns raised about the dependency on AI tools like Claude?

A: Concerns were raised about the increasing dependency on AI tools like Claude, with users discussing the risks of relying solely on AI for coding tasks and the importance of maintaining personal coding skills.

Q: What topics were explored in the Notebook LM Discord Use-Cases section?

A: Topics explored in the Notebook LM Discord Use-Cases section include Notebook LM podcast features, customizing AI outputs for unique styles, bilingual and multilingual uses of AI tools, creating engaging content with AI, and exploring AI's capability in passing the Turing Test.

Q: What advancements were discussed in the Stable Diffusion and AI applications section?

A: The Stable Diffusion and AI applications section discussed topics such as Face Swapping with Reactor Extension for image manipulation, recommendations for Stable Diffusion models like Flux and Pixelwave, learning resources for Stable Diffusion, upscaling generated images, and engagement in other topics like US stocks.

Q: What was the focus of the Apollo Video LLMs challenge competitors section?

A: The Apollo Video LLMs challenge competitors section focused on the performance of Meta's Apollo series of video LLMs compared to llava-OV and Qwen2-VL. It highlighted performance metrics and raised questions about the choice of LLM models.
