[AINews] Meta BLT: Tokenizer-free, Byte-level LLM

Chapters

AI Twitter and Reddit Recap

Azure AI and Phi-4 GGUF Model

Developments in Discord Communities

OpenAI, Gemini, Anthropic, and More

Aider and Paul Gauthier

Aider Paul Gauthier Questions and Tips

Hardware Discussion in LM Studio

GPU MODE - General GPU Discussions

Challenges and Solutions in AI Conversations

Cohere API Discussions

Interconnects: Discussions on AI Marketing, Empathy Consultants, and ML Models

DSPy, Torchtune, and Mozilla AI Discussions

AI Twitter and Reddit Recap

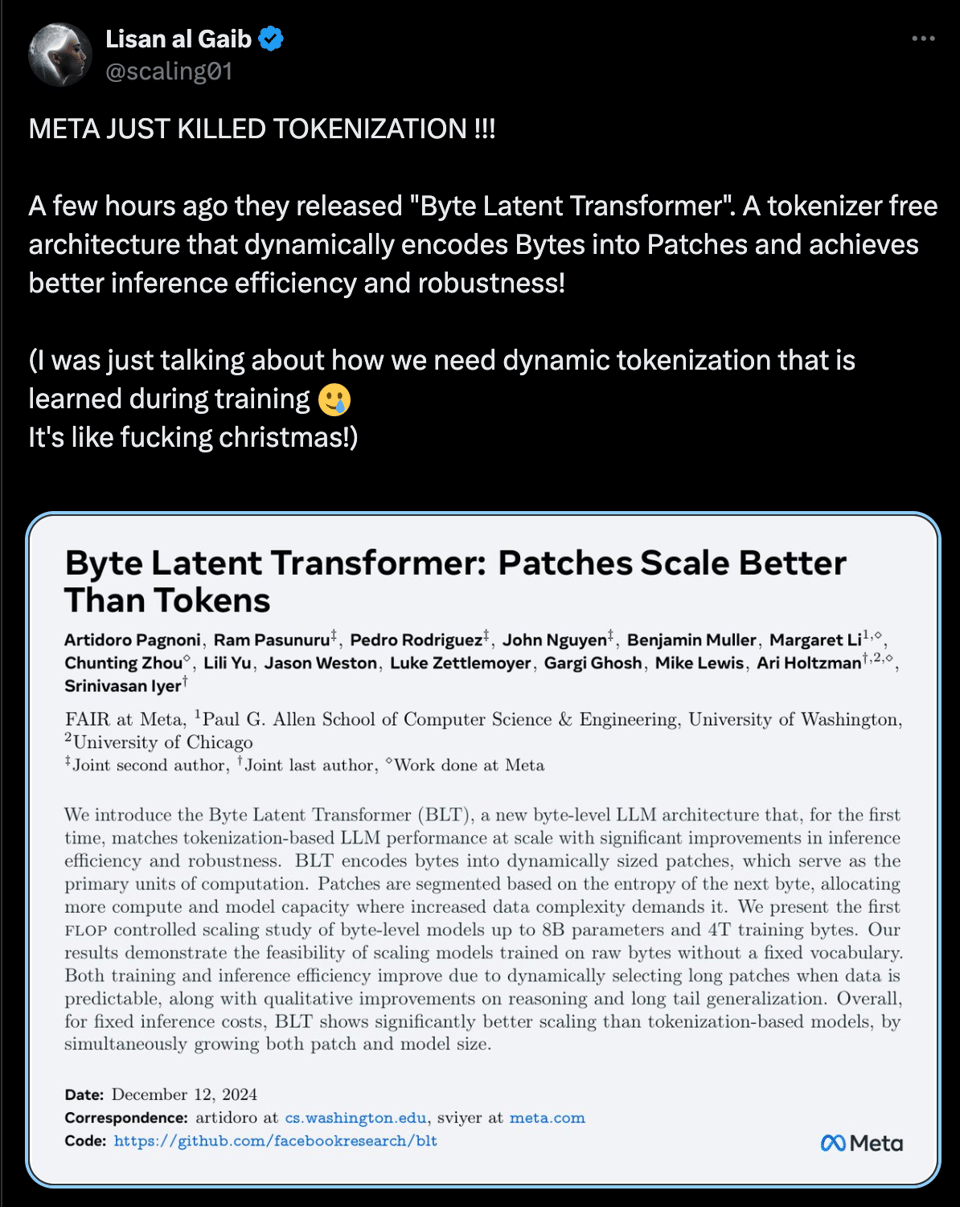

The AI Twitter and Reddit recap section provides a detailed overview of recent discussions and developments in the AI community. On Twitter, key topics include new model and research announcements like Microsoft Phi-4, Meta Research's Byte Latent Transformer, and DeepSeek-VL2. Product launches and updates from OpenAI, Cohere, and Anthropic Research are also highlighted. Discussions on industry trends, benchmark performance, and humor in the AI space are covered. Moving to Reddit, the /r/LocalLlama recap delves into discussions surrounding the release of Microsoft Phi-4, with a focus on benchmark results, skepticism about real-world applications, and the role of synthetic data in training. User comments reflect on the model's performance, development, availability on platforms like Hugging Face, and its potential impact on various tasks.

Azure AI and Phi-4 GGUF Model

Users can download Microsoft Phi-4 in GGUF format, converted from the Azure AI Foundry release, on Hugging Face. Available quantizations include Q8_0, Q6_K, Q4_K_M, and f16, with more planned. Phi-4 shows significant improvements over Phi-3, particularly in multilingual tasks, though it faces competition from models such as Qwen 2.5 14B in some areas. The model is available on Hugging Face and Ollama under the Microsoft Research License Agreement for non-commercial use. In user testing with LM Studio on an AMD ROCm setup, it reached 36 T/s on an RX 6800 XT, and it fits with a 16K context on a 16GB GPU.
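As a rough check on the 16GB claim, the sketch below estimates the size of the weights at each listed quantization. The parameter count (about 14.7 billion) and the bits-per-weight figures are assumptions that do not come from this recap; Q4_K_M in particular is a mixed format, so its value is only an approximate average. The estimate ignores the KV cache and runtime overhead.

```python
# Rough weight-memory estimate for a GGUF model at different quantizations.
# Assumptions (not from the recap): ~14.7e9 parameters, approximate bits per weight.
PARAMS = 14.7e9

BITS_PER_WEIGHT = {
    "f16": 16.0,
    "Q8_0": 8.5,      # 32 int8 weights plus one fp16 scale per block
    "Q6_K": 6.5625,
    "Q4_K_M": 4.85,   # mixed format; approximate average
}


def weight_gib(params: float, bits_per_weight: float) -> float:
    """Return the approximate size of the weights in GiB."""
    return params * bits_per_weight / 8 / 2**30


estimates = {name: weight_gib(PARAMS, bpw) for name, bpw in BITS_PER_WEIGHT.items()}

for name, gib in estimates.items():
    verdict = "under" if gib < 16 else "over"
    print(f"{name:7s} ~{gib:5.1f} GiB of weights ({verdict} 16 GiB before KV cache)")
```

Under these assumptions, f16 does not fit on a 16GB card at all, Q8_0 leaves little headroom, and the smaller quantizations leave room for a longer context.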

Developments in Discord Communities

The Discord channels covered a wide range of topics:

- Cursor IDE's performance advantages over Windsurf, payment struggles with Cursor, model limits, and coding capabilities were recurring themes.

- Eleuther Discord members discussed GPU cluster disruptions and grading fantasy character projects.

- OpenAI Discord featured discussions on ChatGPT features, AI content quality, and local tool adoption.

- LM Studio Discord covered GPU recommendations and memory overclocking.

- Latent Space Discord shared updates on Qwen 2.5 Turbo and Codeium.

- Bolt.new/Stackblitz Discord addressed memory clearance experiments and integrating platforms like Supabase and Stripe.

- GPU MODE Discord delved into PyTorch quantitative training, Triton issues, and a GPU glossary.

- Nous Research AI Discord highlighted new models like Phi-4 and DeepSeek-VL2, and SONAR's tokenization breakthrough.

- Unsloth AI Discord discussed Phi-4's release, Command R7B efficiency, and multi-GPU training support.

- Cohere Discord focused on Command R7B's launch, API errors, and model performance metrics.

- Perplexity AI Discord covered the introduction of international Campus Strategist programs, usability challenges, and image generation issues.

- OpenRouter Discord announced a new provider filter feature and recovered from API uptime issues during AI Launch Week.

OpenAI, Gemini, Anthropic, and More

This section covers issues faced by AI providers such as OpenAI, Gemini, and Anthropic, along with updates from other Discord communities:

- OpenAI's API experienced downtime, Gemini hit bugs with its Flash 2.0 version, the Euryale model had performance issues, and access to custom provider keys is set to launch.

- The Mojo community discussed networking strategies, GPU performance, Mojo's integration with MLIR, and identity.

- In the Interconnects Discord, Microsoft Phi-4 reportedly surpassed GPT-4o on some benchmarks, LiquidAI raised $250M, DeepSeek-VL2 was introduced, Tulu 3 explored post-training techniques, and a reversal in the trend of language model sizes was noted.

- LLM Agents Discord discussed course-related matters such as deadlines, certificate requirements, and guidelines for public links.

- Stability.ai Discord covered issues with WD 1.4, model recommendations, and Stable Diffusion XL Inpainting.

- OpenInterpreter Discord highlighted setups, API integration, token limit functionality, advancements in the development branch, and Meta's Byte Latent Transformer.

- LlamaIndex Discord shared updates on LlamaCloud and AI-related topics.

- DSPy Discord talked about the DSPy framework, AI categorization, Cohere v3 performance, AI system development, and prompt optimization.

- Torchtune Discord discussed Torchtune's upgrade to Python 3.9, challenges with Python's type system, Ruff automation, and more.

- MLOps @Chipro Discord previewed upcoming sessions on advanced retrieval systems, agent runtimes, model management, prompt engineering, and AI compliance.

- Mozilla AI Discord covered events like Demo Day, appreciation for contributors, social media impact, and the availability of event videos.

Aider and Paul Gauthier

Combining Aider with ChatGPT Pro: Users have found success using Aider alongside ChatGPT Pro for coding tasks. The workflow relies on copy-pasting commands between Aider and ChatGPT during the coding process.

Gemini's Effectiveness in Code Review: Gemini 2.0 Flash has been highlighted for its capability to process large pull requests effectively, enhancing efficiency during reviews. Users have expressed satisfaction with Gemini's performance, particularly in managing extensive codebases.

Fine-Tuning Models for Recent Libraries: One user shared their experience of successfully fine-tuning models to update knowledge on recent libraries by condensing documentation into relevant contexts. This approach improved the model's performance significantly when dealing with newer versions of libraries.

Challenges with Using Aider: Some users reported issues with the O1 Pro model, leading them to revert to O1 Preview or Sonnet for more reliable performance. Despite challenges, the integration of features like auto-testing and watch-files with Aider has prompted discussions on enhancing developer productivity.

LLM Performance Comparison: Users discussed where to find reliable leaderboards for comparing large language models on coding tasks. They pointed out that many existing leaderboards may be skewed because models have already seen the benchmark data during training, and suggested alternatives like livebench.ai for more accurate comparisons.

Aider Paul Gauthier Questions and Tips

- Aider struggles with file management in architect mode: Users reported that Aider does not always prompt to add the files it needs to edit, which caused confusion when scripting automated code cleanup. One user noted that the behavior is inconsistent, with Aider only occasionally failing to request the necessary files.

- Integrating Obsidian for better project workflows: Members discussed using Obsidian to track planning files and how well it fits into their workflows. One user suggested that Mermaid diagrams could improve visual workflow organization, and links to helpful resources were shared.

- Interest in Fast Apply model for code edits: A user expressed curiosity about the Fast Apply model for boosting coding efficiency, specifically in editing large portions of code. Questions arose regarding prior implementations within Aider and potential for integrating it with existing projects.

- Comparative analysis of Claude AI and free models: A user inquired about how Claude AI compares to free models like Gemini and LLaMA, seeking insights for Aider applications. This led to discussions about different model capabilities likely influencing performance in various coding tasks.

- Rust-analyzer highlights issues due to external edits: A user sought advice on how to keep Rust-analyzer updated with changes made by Aider, particularly in relation to highlighting errors. They reported attempts using Cargo commands but found that changes had not reflected accurately within their development environment.

Hardware Discussion in LM Studio

In the LM Studio hardware discussion, various topics related to hardware considerations for AI work were explored. Members discussed the value for money of GPUs like the RTX 3060 compared to the 3070 and 3090. There were comparisons between AMD and Intel performance, highlighting the advantages of VRAM capacity in specific workloads. The importance of selecting the right power supply units (PSUs) and memory overclocking for optimization was emphasized. Additionally, challenges related to model requirements for AI training, including loading LLM models and keeping drivers updated, were shared.

GPU MODE - General GPU Discussions

- Discusses the Supabase integration in http://bolt.new, which is undergoing code review and final improvements.

- Explores creating edge functions in Bolt and modifying existing GitHub repositories with BoltSync.

- Provides resources for integrating Stripe with Bolt.new and showcases a dating app built with Bolt.new, ChatGPT, and Make.com.

- Mentions the launch of bolt.diy for creating full-stack web applications using any LLM.

- Highlights the oTTomator Community for AI-driven automation.

- Discusses various GPU topics including SSD recommendations, tensor operations, and sequence packing.

- Examines the benefits of sequence packing and tensor contiguity for GPU performance.

- Explores memory considerations in models and the impact of tensor reshaping on performance metrics.
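To make the sequence packing point concrete, here is a minimal sketch that compares padding every sequence to a fixed row length against greedily packing several sequences into each row. The sequence lengths, the 2048-token row size, and the function names are illustrative assumptions, not details from the discussion. Real training code also needs attention masking so that packed sequences do not attend to each other, which this sketch leaves out.

```python
# Compare token utilization: one sequence per row vs. greedy packing into rows.
# Lengths and the 2048-token row size are made-up illustrative values.


def pack_first_fit_decreasing(lengths: list[int], max_len: int) -> list[list[int]]:
    """Greedily pack sequence lengths into rows of capacity max_len."""
    rows: list[list[int]] = []
    for length in sorted(lengths, reverse=True):
        if length > max_len:
            raise ValueError(f"sequence of length {length} exceeds max_len {max_len}")
        for row in rows:
            if sum(row) + length <= max_len:
                row.append(length)
                break
        else:
            # No existing row has room, so start a new one.
            rows.append([length])
    return rows


def utilization(num_rows: int, max_len: int, total_tokens: int) -> float:
    """Fraction of row capacity holding real tokens rather than padding."""
    return total_tokens / (num_rows * max_len)


lengths = [1800, 1200, 900, 700, 512, 300, 256, 128]
max_len = 2048
total = sum(lengths)

padded_util = utilization(len(lengths), max_len, total)
packed = pack_first_fit_decreasing(lengths, max_len)
packed_util = utilization(len(packed), max_len, total)

print(f"padded: {len(lengths)} rows, {padded_util:.1%} real tokens")
print(f"packed: {len(packed)} rows, {packed_util:.1%} real tokens")
```

With these example lengths, packing cuts the batch from eight rows to three, so far less compute is spent on padding tokens.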

Challenges and Solutions in AI Conversations

- Using adapters to improve performance and efficiency is suggested over training new models from scratch.

- Corrections for difficult tokens maintain quality in model outputs without heavily impacting original intentions.

- Inquiry for high-quality datasets for AI, specifically for reasoning, math, or coding tasks, as alternatives to existing simpler datasets.

- Discussions on continuous fine-tuning, local LLM capabilities, machine learning study paths, quantization comparison, and open-source coding LLMs integration with IDEs.

- Introduction of new language models like Phi-4 and DeepSeek-VL2, Meta's tokenization breakthrough, GPU glossary launch, and Byte Latent Transformers.

- Ongoing discussions on quantization drawbacks, anticipation for Phi-4 release, Command R7B performance, multi-GPU support in Unsloth, and challenges with vision models and quantization.
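For readers unfamiliar with what "byte-level" means in the context of the Byte Latent Transformer, the sketch below shows the basic idea of feeding raw UTF-8 bytes to a model instead of tokenizer output. It is not Meta's architecture, which does considerably more than this; the function names are hypothetical and only illustrate that any string maps losslessly onto a fixed vocabulary of 256 values.

```python
# Byte-level input: every string maps to UTF-8 bytes, so the "vocabulary" is 0..255.
# This only illustrates the input representation, not the model itself.


def to_byte_ids(text: str) -> list[int]:
    """Encode text as a list of byte values (0-255)."""
    return list(text.encode("utf-8"))


def from_byte_ids(ids: list[int]) -> str:
    """Decode a list of byte values back to text."""
    return bytes(ids).decode("utf-8")


sample = "naïve café"
ids = to_byte_ids(sample)

print(len(sample), "characters ->", len(ids), "byte ids")
print(ids)
print(from_byte_ids(ids) == sample)
```

Non-ASCII characters expand to more than one byte, so byte sequences are longer than character sequences; no learned tokenizer vocabulary is needed.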

Cohere API Discussions

The discussion in the API-discussions channel includes topics such as community access issues, ClientV2 installation confusion, sharing of the GitHub resource for the Cohere Python library, discrepancies in model card information, and observations regarding model file sizes. Members provide solutions like updating the pip package, accessing the GitHub repository, and acknowledging and addressing reported discrepancies. The dialogue showcases a supportive community assisting with various API-related challenges and sharing resources for better utilization of Cohere tools.

Interconnects: Discussions on AI Marketing, Empathy Consultants, and ML Models

This section covers discussions within the 'Interconnects' community led by Nathan Lambert. Topics include concerns around the 'Bitter Lesson', the use of personal scenarios in AI ads, and suggestions for hiring empathy consultants in AI companies. It also covers the launch of DeepSeek-VL2 with new ML features and the potential of its variants labeled '4.5A27.5B' and 'Tiny: 1A3.4B' (labels that appear to give active and total parameter counts). The section links to related tweets and GitHub repositories.

DSPy, Torchtune, and Mozilla AI Discussions

- DSPy Discussions: The section covers topics related to the DSPy framework, including its integration with MegaParse, document parsing necessity, and user feedback on DSPy.

- Torchtune Discussion: Users discuss Torchtune's upgrade to Python 3.9 and how it simplifies type hinting.

- MLOps @Chipro Events: Details about upcoming sessions on next-gen retrieval, advanced agent runtimes, model management at scale, dynamic prompt engineering, and AI safety & compliance.

- Mozilla AI Announcements: A recap of Mozilla Builders Demo Day, special thanks to contributors, social media highlights, and availability of an event video for viewing.
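The Torchtune item above mentions simpler type hinting after the move to 3.9. Assuming this refers to Python 3.9, where PEP 585 made the builtin collections usable as generic types, the change might look like the following. The function names are hypothetical and are not taken from Torchtune's code.

```python
# Pre-3.9 style: generic aliases imported from typing.
from typing import Dict, List, Tuple


def count_tokens_old(batches: List[List[int]]) -> Dict[str, Tuple[int, int]]:
    """Return (number of batches, total tokens) under the key 'tokens'."""
    total = sum(len(b) for b in batches)
    return {"tokens": (len(batches), total)}


# Python 3.9+ style (PEP 585): builtin collections are subscriptable directly,
# so the typing imports above are no longer needed for these hints.
def count_tokens_new(batches: list[list[int]]) -> dict[str, tuple[int, int]]:
    """Same behavior as count_tokens_old, with builtin generic hints."""
    total = sum(len(b) for b in batches)
    return {"tokens": (len(batches), total)}


example = [[1, 2, 3], [4, 5]]
print(count_tokens_old(example) == count_tokens_new(example))
```

The two functions behave identically at runtime; only the annotations differ.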

FAQ

Q: What are some of the recent model and research announcements in the AI community discussed in the essay?

A: Recent model and research announcements in the AI community include Microsoft Phi-4, Meta Research's Byte Latent Transformer, and DeepSeek-VL2.

Q: Where can users download the Microsoft Phi-4 GGUF model?

A: Users can download the Microsoft Phi-4 GGUF model, converted from Azure AI Foundry, on Hugging Face.

Q: What quantizations does the Microsoft Phi-4 model offer?

A: The Microsoft Phi-4 model offers various quantizations like Q8_0, Q6_K, Q4_K_M, and f16, with more quantizations in the pipeline.

Q: What are some of the challenges faced by AI providers like OpenAI, Gemini, and Anthropic mentioned in the essay?

A: Challenges faced by AI providers include OpenAI's API downtime, bugs encountered by Gemini with their Flash 2.0 version, performance issues with the Euryale model, and the upcoming launch of custom provider keys access.

Q: What is the integration of Aider with ChatGPT Pro used for according to the essay?

A: The integration of Aider with ChatGPT Pro is used to optimize workflows for coding tasks, allowing for efficient copy-pasting of commands during the coding process.

Q: What were the main discussions in the 'LM Studio hardware discussion' mentioned in the essay?

A: The 'LM Studio hardware discussion' covered topics related to hardware considerations for AI work, such as comparisons between GPUs like RTX 3060, 3070, and 3090, AMD and Intel performance, value for money, and challenges related to model requirements for AI training.

Q: What topics were discussed in the API-discussions channel mentioned in the essay?

A: Topics discussed in the API-discussions channel include community access issues, ClientV2 installation confusion, discrepancies in model card information, and solutions provided for these challenges.

Q: What are some of the key updates shared in the 'Interconnects' community led by Nathan Lambert according to the essay?

A: Key updates include discussions around the 'Bitter Lesson', personal scenarios in AI ads, new ML features in DeepSeek-VL2, and the potential of models like '4.5A27.5B' and 'Tiny: 1A3.4B'.

Q: What was discussed in the section 'DSPy Discussions' outlined in the essay?

A: The 'DSPy Discussions' section covers topics related to the DSPy framework, including integration with MegaParse, document parsing necessity, and user feedback on DSPy.

Q: What details were mentioned about Mozilla AI in the essay?

A: Details about Mozilla AI include a recap of Mozilla Builders Demo Day, special thanks to contributors, social media highlights, and the availability of an event video for viewing.
