[AINews] Olympus has dropped (aka, Amazon Nova Micro|Lite|Pro|Premier|Canvas|Reel)

Chapters

AI Reddit Recap: /r/LocalLlama Recap

New Tools: Open-WebUI Enhanced with Advanced Features

OpenAI Discord

LM Studio Features and Discussions

Nous Research AI General Messages

Compile-time Metaprogramming and User Experiences

OpenRouter & OpenAI Discussions

Insights on GPU Performance and New Image Synthesis Model

GEMM Toolkit Updates, Runtime Error with Meta Tensors, GPU Mode Discussions

LLM Agents (Berkeley MOOC) Hackathon Announcements

Performance Optimization Discussions and New Models

AI Reddit Recap: /r/LocalLlama Recap

Theme 1: HuggingFace Imposes 500GB Limit, Prioritizes Community Contributors

- HuggingFace Storage Limit Update: HuggingFace introduced a 500GB storage limit for free-tier accounts, which previously had unlimited storage. Users discussed the impact on model storage and raised concerns about the availability of large models such as LLaMA 65B. The community debated the business implications and possible workarounds, including local storage and torrents. VB from HuggingFace clarified that the update relates to existing limits and that valuable community contributions, such as model quantization and fine-tuning, will continue to be supported. The discussion centered on preventing misuse while still supporting genuine community involvement.

New Tools: Open-WebUI Enhanced with Advanced Features

The author developed several tools for Open-WebUI, including an arXiv Search tool, a Hugging Face Image Generator, and function pipes such as a Planner Agent using Monte Carlo Tree Search and Multi Model Conversations supporting up to 5 different AI models. The AI stack includes Ollama, Open-WebUI, OpenedAI-tts, ComfyUI, n8n, Qdrant, and AnythingLLM, primarily running 8B Q6 or 14B Q4 models with 16k context. Users suggested moving to Python 3.12 for better performance, though the developer cited time constraints. Interest was expressed in the Monte Carlo Tree Search (MCTS) implementation for research summarization. The free version of GPT-4o is likely the 4o-mini variant, with users noting its performance limitations compared to QwQ based on benchmark results.
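To make the "8B Q6 or 14B Q4" choice above concrete, here is a rough back-of-the-envelope sketch of weight sizes. It assumes nominal widths of 6 and 4 bits per weight; real quantized files are somewhat larger because they also store scaling metadata, and the memory needed for a 16k context (KV cache) is not counted. The helper name `weight_size_gb` is hypothetical.

```python
def weight_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Nominal bit widths are an assumption; actual quantization formats vary.
for name, n_params, bits in [("8B at Q6", 8e9, 6), ("14B at Q4", 14e9, 4)]:
    print(f"{name}: ~{weight_size_gb(n_params, bits):.1f} GB of weights")
```

Under these assumptions the two setups land at roughly 6 GB and 7 GB of weights, which may be why they are treated as interchangeable options on the same hardware.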

OpenAI Discord

Italy announced plans to ban AI platforms like OpenAI unless users can request data removal, sparking debates on regulatory effectiveness. Users reported malfunctions in ChatGPT Plus features post-subscription. A GPT designed for billing hours faced functionality issues and sparked humorous speculations. Custom instructions are being used to tailor ChatGPT's writing style for users. AI engineers seek resources to enhance prompt engineering skills for ChatGPT customization.

LM Studio Features and Discussions

Discussions in the LM Studio Discord channel include questions about Qwen LV 7B vision functionality and the impact of FP8 quantization on model efficiency. Members also discussed Docker container support in HF Spaces, skepticism about Intel Arc Battlemage cards for AI tasks, and performance issues with LM Studio on Windows systems. Users also asked about token size limits for embedding models and sought clarity on RAG implementations in DSPy for customizing datasets.

Nous Research AI General Messages

- DisTrO Training Update: Current training run aiming to finish soon, details on hardware and user contributions expected.

- Test run with no immediate public registry or tutorials available.

- Using Smaller Models for Different Tasks: Benefits of smaller models in creative tasks, suggested balanced approach with larger models.

- Function Calling vs. MCP in AI Models: Method comparison highlighting the need for clarification on uses.

- Community Contributions to AI Training: Discussion on decentralized training challenges and importance of efficient large-scale training methods.

- Job Opportunities in AI Development: Recruitment call for AI company and query on model availability.

- Links mentioned:

- Papers with Code - ARC Dataset

Compile-time Metaprogramming and User Experiences

This section covers forum discussions on compile-time metaprogramming and user experiences with AI coding tools.

- The analysis of OpenRouter's benchmark results reveals performance issues sparking user discussions on solutions for improvement.

- Users share positive experiences with Aider's new features enhancing user experience.

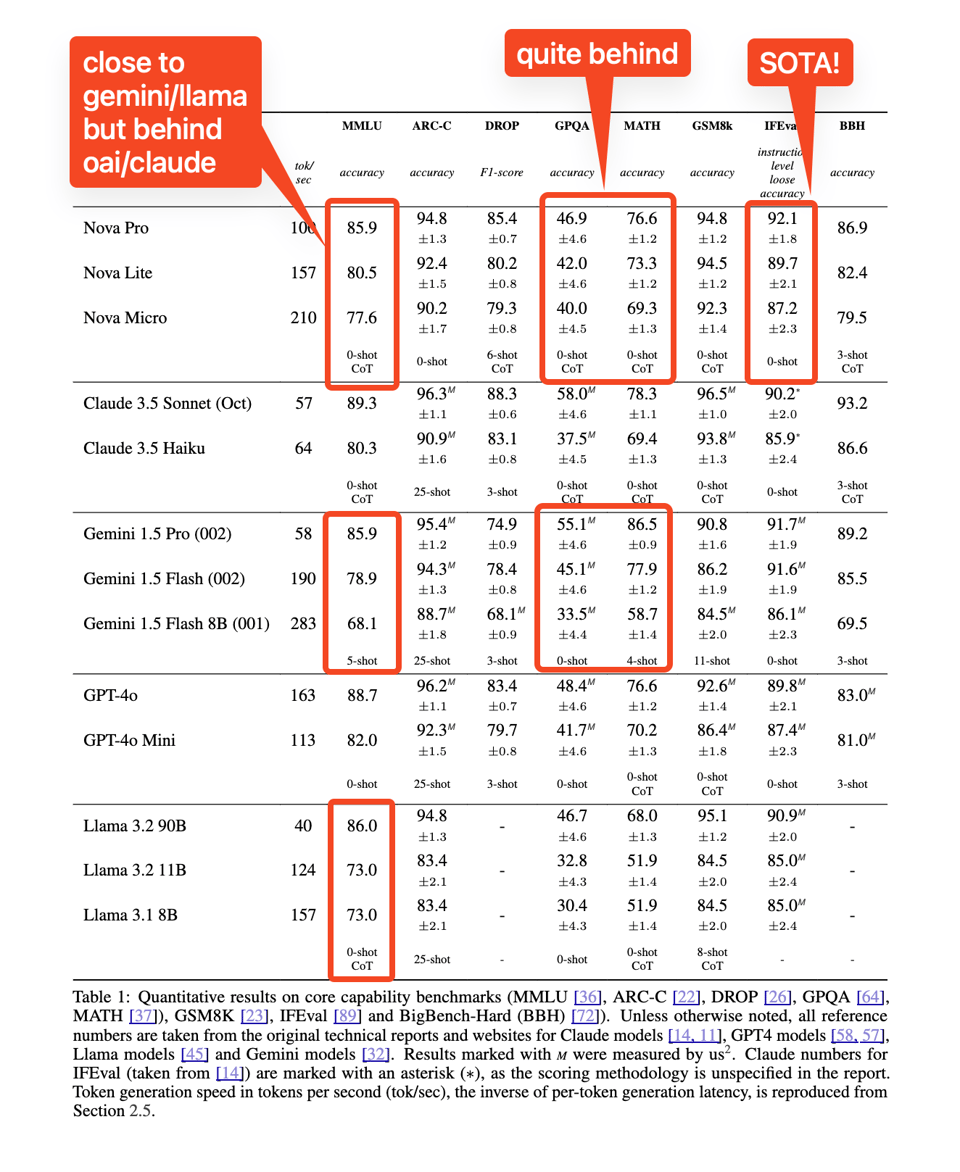

- Amazon unveils new foundation models with multimodal capabilities and competitive pricing.

- Users describe Aider as more deliberate than other coding aids, which they say improves their coding efficiency.

- Troubleshooting issues with Repo-map led to community members suggesting ways to restore configurations.

These discussions indicate a keen interest in optimizing AI tools for coding workflows and addressing performance challenges.

OpenRouter & OpenAI Discussions

The OpenRouter and OpenAI Discord channels covered a range of topics. OpenRouter posted updates on model removals, price reductions, and discounts. OpenAI discussions covered Italy's AI regulation, ChatGPT feature issues, quantum computing in voting, content moderation challenges, and AI translation comparisons. Users also compared AI models, discussed user demands and current limitations, and shared tips for improving productivity and model performance.

Insights on GPU Performance and New Image Synthesis Model

Users shared recommendations for getting started with Stable Diffusion and stressed deciding early whether to run it locally or on cloud GPUs. There were also discussions on recognizing and reporting scammers in the server. The conversation highlighted differences in GPU performance, with memory and speed as the deciding factors. Users compared experiences with different models and pointed out that cheaper cloud GPU options may lead to better overall performance. Members also discussed a new image synthesis model called Sana, noting its efficiency and quality compared to prior versions. Some users were skeptical about its commercial usage and suggested Flux or previous models for everyday purposes.

GEMM Toolkit Updates, Runtime Error with Meta Tensors, GPU Mode Discussions

- GEMM Toolkit Updates generate curiosity: Users discuss the release of the 12.6.2 toolkit and its impact on prior methods used for GEMM.

- Runtime Error with Meta Tensors: Users seek help regarding runtime errors with specific functions involving meta tensors, leading to requests for debugging assistance.

- GPU Mode Discussions: Various discussions in different GPU modes cover topics like floating point representations in Triton, warp schedulers in GPU architecture, and training success with bf16, among others.

LLM Agents (Berkeley MOOC) Hackathon Announcements

Sierra AI hosts info session for developers

- An exclusive info session with Sierra, a leading conversational AI platform, is scheduled for 12/3 at 9am PT, accessible via livestream on YouTube. Participants will gain insights into Sierra’s capabilities and career opportunities.

- The session will cover topics such as Sierra’s Agent OS and Agent SDK, emphasizing lessons learned from deploying AI agents at scale.

Sierra AI looking for talent

- Sierra is seeking talented developers to join their team and will discuss exciting career opportunities during the info session. Interested parties are encouraged to RSVP to secure their spot for the livestream.

- “Don’t miss this chance to connect with Sierra and explore the future of AI agents!”

Links mentioned:

- Meet with Sierra AI: LLM Agents MOOC Hackathon Info Session · Luma

- LLM Agents MOOC Hackathon - Sierra Information Session

Performance Optimization Discussions and New Models

- ADOPT optimizer updates integrated: The team integrated the latest ADOPT optimizer updates into the Axolotl codebase and encouraged members to test them. Members discussed the advantages of this optimizer.

- Optimal convergence with any beta value: The ADOPT optimizer can achieve optimal convergence with any beta value, enhancing performance in various scenarios. This feature was highlighted during recent discussions among members.

- Question about changing thread group/grid sizes: Members inquired about altering thread group/grid sizes during graph rewrite optimizations in uopgraph.py, questioning if sizes are fixed based on earlier searches.

- Clarification on graph optimization processes: Discussion focused on how uopgraph.py manages thread group sizes during optimization, with members seeking insights on making adjustments post-optimization and understanding how initial searches impact final sizes.

- Bio-ML Revolution in 2024: The year 2024 sees a surge in bio-ML, with achievements like Nobel prizes for structural biology prediction and advancements in protein sequencing models. Concerns exist around compute-optimal modeling curves.

- Introducing Gene Diffusion for Single-Cell Biology: The Gene Diffusion model, utilizing a continuous diffusion transformer on gene count data, offers insights into cell functional states using self-supervised learning.

- Seeking Clarity on Training Regime of Gene Diffusion: Members are curious about the training regime of the Gene Diffusion model, particularly its input/output relationship and predictive aims, highlighting the need for community assistance in grasping complex concepts.
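For readers curious about the ADOPT optimizer mentioned in the bullets above, the following is a minimal sketch of an ADOPT-style update in plain Python. It follows my understanding of the published algorithm: the gradient is normalized by the previous second-moment estimate before entering the momentum term, and the second moment is updated afterwards. The function name `adopt_minimize`, the `grad_fn` callback, and the hyperparameter values are illustrative assumptions, not the Axolotl integration.

```python
import math
from typing import Callable, List


def adopt_minimize(
    grad_fn: Callable[[List[float]], List[float]],
    theta: List[float],
    lr: float = 0.1,
    beta1: float = 0.9,
    beta2: float = 0.9999,
    eps: float = 1e-6,
    steps: int = 1000,
) -> List[float]:
    """Run an ADOPT-style loop (illustrative sketch) and return the final parameters."""
    # Initialize the second moment from the first gradient; no parameter update yet.
    g = grad_fn(theta)
    v = [gi * gi for gi in g]
    m = [0.0] * len(theta)

    for _ in range(steps):
        g = grad_fn(theta)
        # Normalize the current gradient by the PREVIOUS second-moment estimate,
        # then fold the normalized gradient into the momentum term.
        m = [
            beta1 * mi + (1 - beta1) * gi / max(math.sqrt(vi), eps)
            for mi, gi, vi in zip(m, g, v)
        ]
        theta = [ti - lr * mi for ti, mi in zip(theta, m)]
        # Only now update the second moment with the current gradient.
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
    return theta


# Toy usage: minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
result = adopt_minimize(lambda th: [2 * (th[0] - 3.0)], [0.0])
print(result)
```

The toy quadratic is only there to show the loop converging; it says nothing about behavior on real training runs.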

FAQ

Q: What is the reason behind HuggingFace implementing a 500GB storage limit for free-tier accounts?

A: According to the discussion, the 500GB limit replaced what had been unlimited free-tier storage. HuggingFace staff said the update was related to existing limits and is meant to prevent misuse while still supporting valuable community contributions.

Q: What are some of the tools developed for Open-WebUI by the author mentioned?

A: The author developed an arXiv Search tool, a Hugging Face Image Generator, a Planner Agent using Monte Carlo Tree Search, and Multi Model Conversations supporting up to 5 different AI models.

Q: What were some of the discussions surrounding the performance of the GPT-4o compared to QwQ?

A: Users noted the performance limitations of the free GPT-4o, likely being the 4o-mini variant, in contrast to QwQ based on benchmark results.

Q: What sparked debates around Italy's announcement to potentially ban AI platforms like OpenAI?

A: Italy's plan to ban AI platforms like OpenAI unless users can request data removal sparked debates on the regulatory effectiveness in managing AI technologies.

Q: What are some of the discussions happening in the LM Studio Discord channel related to AI models and tools?

A: Discussions include questions about Qwen LV 7B vision functionality, the impact of FP8 quantization on model efficiency, Docker container support in HF Spaces, skepticism about Intel Arc Battlemage cards for AI tasks, and performance issues with LM Studio on Windows systems.

Q: What topics will the exclusive info session hosted by Sierra AI cover?

A: The session will cover Sierra's Agent OS and Agent SDK, with lessons learned from deploying AI agents at scale, and will offer insights into Sierra's capabilities and career opportunities.

Q: What updates were integrated for the ADOPT optimizer, and how did members respond?

A: The latest ADOPT optimizer updates were integrated into the Axolotl codebase, and members were encouraged to test them. Members discussed the optimizer's advantages, including its ability to achieve optimal convergence with any beta value.

Q: What bio-ML developments marked 2024, and what concerns were raised?

A: 2024 saw a surge in bio-ML, with achievements like Nobel prizes for structural biology prediction and advancements in protein sequencing models. Concerns were raised around compute-optimal modeling curves.

Q: What is the Gene Diffusion model, and why is there a curiosity about its training regime?

A: The Gene Diffusion model applies a continuous diffusion transformer to gene count data, using self-supervised learning to give insights into cell functional states. Members are curious about the training regime, particularly its input/output relationship and predictive aims, and are seeking community help in understanding these concepts.
