[AINews] OpenAI Voice Mode Can See Now - After Gemini Does • Buttondown

Chapters

AI Twitter and Reddit Recap

Theme 4. Gemini series shines in Math Benchmarks, Growing Cognitive Reputation

Challenges and Optimizations in AI Model Development

Discussions on Various AI Tools and Platforms

Discussion on Various AI Platforms

Perplexity AI Sharing

Recent AI Optimizers and Model Enhancements

Hermes Model Benchmarks and Event Updates

GPU and Machine Learning Discussions

Innovations and Discussions in AI Community

Gorilla LLM (Berkeley Function Calling) and Axolotl AI Discussions

AI Twitter and Reddit Recap

The AI Twitter Recap provided insights into AI model releases, updates, infrastructure, development, industry updates, and humor from Twitter discussions. The AI Reddit Recap covered discussions from /r/LocalLlama, including topics like roleplaying and prompt handling excellence of Llama 3.3-70B, Microsoft's Phi-4 small language model specializing in complex reasoning, and the comparison between OpenAI o1 and Claude 3.5 Sonnet for a $20 tier subscription. Both recaps showcased a variety of key topics, trends, and discussions happening in the AI community on Twitter and Reddit.

Theme 4. Gemini series shines in Math Benchmarks, Growing Cognitive Reputation

Gemini series shines in Math Benchmarks and Cognitive Reputation

- Gemini and Qwen are highlighted for their exceptional performance on U-MATH, a new university-level math benchmark. Gemini is considered the greatest of all time (GOAT) in this context, while Qwen is recognized as the leading performer.

- Gemini's Performance: Gemini consistently outperforms other models across various benchmarks like U-MATH, LiveBench, and FrontierMath. Google's focus on math and science projects is believed to contribute to Gemini's success.

- Model Comparisons and Challenges: Gemini excels in performance compared to smaller models, but struggles with contextual cues. Qwen models face issues with understanding instructions, leading to hallucinations.

- Benchmark Details and Updates: U-MATH and μ-MATH benchmarks test LLMs at complex levels. Gemini Pro leads in abilities despite overall lower hallucination rates. The leaderboard and HuggingFace links offer more insights.

Challenges and Optimizations in AI Model Development

Discussions highlighted the challenges of model merging and quantization, with members advocating full-precision merging to preserve model quality. The role of LoRA adapters in reducing fine-tuning cost and VRAM usage was discussed extensively. SPDL was presented as a way to accelerate AI model training through thread-based data loading, improving data management and throughput. The OpenPlatypus dataset was released along with recommendations for using it well. In a separate community, members shared anticipation for the 5090 GPU release, ways to combat GPU scalpers, and recommendations for image generation models. Further discussions covered Stability AI, performance comparisons of optimizers like Muon, NeurIPS prize controversies, and new attention mechanisms like Cog Attention. The LM Studio community discussed GPU grids, running large models on Mac devices, GPU comparisons, model safety considerations, and the effectiveness of different LLM strategies.
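The LoRA and full-precision merging points can be made concrete with a little arithmetic. The sketch below is not taken from the discussions themselves; it assumes the standard LoRA formulation, in which a frozen weight matrix W receives a low-rank update scaled by alpha / rank, and it uses a hypothetical 4096 x 4096 layer purely for illustration. The function names are made up for this example.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two low-rank factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def merge_lora(weight, lora_a, lora_b, alpha: float, rank: int):
    """Return weight + (alpha / rank) * (B @ A), computed in Python floats.

    weight is d_out x d_in, lora_a is rank x d_in, lora_b is d_out x rank,
    all given as lists of lists. Doing this sum on unquantized weights is
    one reading of "full precision merging" (an assumption of this sketch).
    """
    scale = alpha / rank
    merged = []
    for i, row in enumerate(weight):
        new_row = []
        for j, w in enumerate(row):
            # Entry (i, j) of the low-rank product B @ A.
            delta = sum(lora_b[i][k] * lora_a[k][j] for k in range(rank))
            new_row.append(w + scale * delta)
        merged.append(new_row)
    return merged


if __name__ == "__main__":
    # Hypothetical layer size, chosen only for illustration.
    d_in = d_out = 4096
    full = d_in * d_out
    lora = lora_trainable_params(d_in, d_out, rank=8)
    print(f"full fine-tune: {full} params, LoRA rank 8: {lora} params")
```

Because only the two small factors are trained, gradients and optimizer state are needed for far fewer parameters, which is where the VRAM savings typically come from. Merging before any quantization step avoids adding a small update to weights that have already been rounded.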

Discussions on Various AI Tools and Platforms

This section highlights the discussions on different AI tools and platforms within various Discord channels. Users engaged in conversations regarding performance issues, model comparisons, credit management concerns, user experiences, and feedback on tools like Windsurf, Codeium, and other AI platforms. They also shared their experiences with debugging tools like O1 Pro, the performance of Gemini Flash, challenges with DeepSeek, and the efficiency of different models for coding tasks. These discussions provide valuable insights into user perspectives and experiences with AI technologies in different contexts.

Discussion on Various AI Platforms

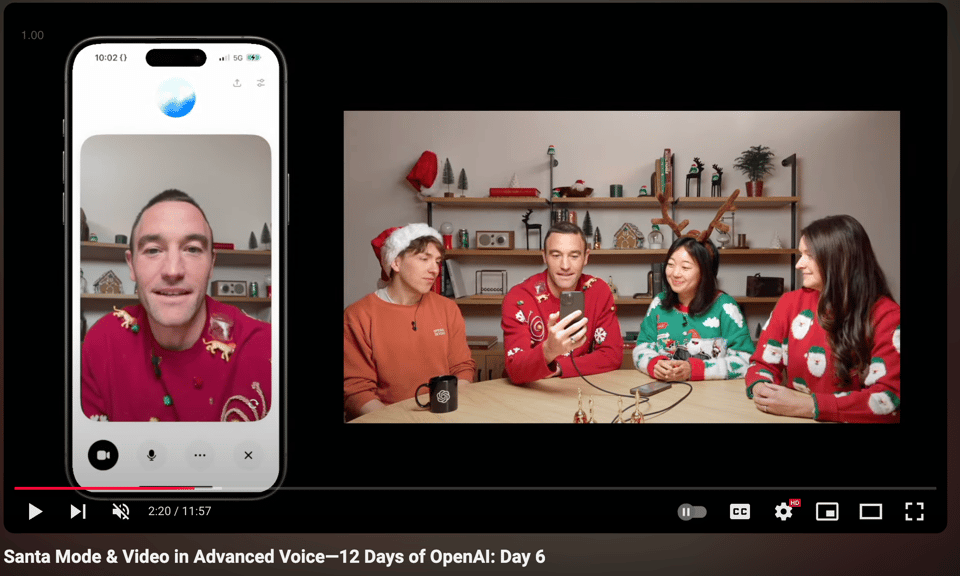

This section discusses the performance and critiques of different AI platforms. The challenges and praises for tools like DeepSeek, Devin AI, and OpenHands are highlighted. Users share feedback on installation issues and coding capabilities. The section also mentions updates on Santa mode in Advanced Voice by OpenAI, and the comparison between Gemini 2.0 and Claude. Additionally, users discuss frustrations with AI tools' performance, pricing, and hosting solutions. Conversations include lighthearted discussions on generational programming styles and progress in AI technology like image generation and voice AI. Issues like service outages, file formats for Custom GPT, and formatting evaluations are also explored in detail.

Perplexity AI Sharing

Six messages were shared in the Perplexity AI ▷ #sharing discussion. Members discussed various topics including issues with the B650E Taichi motherboard freezing, exploring Yong Yuan Niwan Cheng Sinaitoy, investigating GPR devices and methodologies, creative poetry requests, and crafting an advertisement for one million dollars. Each topic sparked discussions and engagements among participants, diving into solutions, implications, applications, and creative brainstorming sessions.

Recent AI Optimizers and Model Enhancements

Recent discussions highlighted advancements in AI optimizers and model enhancements. New optimizers like Muon were praised for their gradient orthogonalization and potential manifold capacity loss benefits. The introduction of Cog Attention with negative attention weights was seen as a way to boost model expressiveness by allowing token deletion, copying, or retention simultaneously. Challenges were identified with prepending auxiliary information during training and suggestions included exploring EM-style approaches or modifying input representations. Innovative softmax alternatives like using tanh transformations for normalization and a multivariate tanh version were proposed for improved model expressiveness. The dynamics of attention mechanisms were scrutinized, focusing on enforcing sparsity without losing information processing complexity.
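To give a feel for what "gradient orthogonalization" means, here is a minimal sketch using the classical cubic Newton-Schulz iteration, which pushes every singular value of a matrix toward 1. This is an illustration under stated assumptions, not Muon's actual implementation: Muon is generally described as using a tuned variant of this idea, and the function names below are hypothetical.

```python
import math


def matmul(a, b):
    """Plain list-of-lists matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def orthogonalize(grad, steps=10):
    """Approximate the orthogonal factor of grad via X <- 1.5 X - 0.5 X X^T X."""
    # Dividing by the Frobenius norm keeps all singular values at or below 1,
    # which is inside the region where the iteration converges.
    norm = math.sqrt(sum(v * v for row in grad for v in row)) or 1.0
    x = [[v / norm for v in row] for row in grad]
    for _ in range(steps):
        xxt_x = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * x[i][j] - 0.5 * xxt_x[i][j]
              for j in range(len(x[0]))] for i in range(len(x))]
    return x


if __name__ == "__main__":
    update = orthogonalize([[3.0, 1.0], [1.0, 2.0]])
    print(matmul(update, transpose(update)))  # close to the identity matrix
```

The resulting update keeps the directions of the original gradient but equalizes their magnitudes, which is the property the optimizer discussion refers to.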

Hermes Model Benchmarks and Event Updates

The section discusses benchmark expectations for Hermes 3B, Mistral 7B, and Qwen 2.5, event registration updates, insights on math benchmark evaluations, discussions on running models like Hermes, and exploring new model training methodologies. Users are interested in comparing benchmarks of different models, registration for upcoming events, new math benchmark datasets, tools for running models, and novel training ideas. The section also includes discussions on exploring beyond Mistral 7B, live streams for events, evolving evaluation techniques, running models using LM Studio, and pretraining smaller models using big model hidden states.

GPU and Machine Learning Discussions

This section delves into discussions related to GPUs, tensor cores, register access limitations, and machine learning topics. Members discussed the operations of GPU schedulers, tensor cores utilizing warp-level execution, and potential caveats with int8 and fp8 on newer architectures. Additionally, there were proposals to include limitations of register access and provide code snippets demonstrating efficient register access patterns. Furthermore, conversations revolved around performance evaluations for kernels using markdown blogs and exploring megaprompt strategies. In the context of ARC competitions, there were discussions on leveraging LLMs for solving riddles, strategies for test time training, and hopes for better compute resources. The section also covers Cohere support, system status updates, and interactions within the community. Moreover, there are insights into quantization techniques, Cohere Go SDK updates, model calibration datasets, and challenges faced by users accessing Cohere API. Lastly, upcoming MOOC announcements, hackathon submissions, event details, XR technology advancements, and community interactions are highlighted.
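For readers unfamiliar with the int8 quantization mentioned here, the following is a minimal sketch of symmetric absmax quantization. It is not the specific technique from the discussions; the function names are hypothetical, and real toolchains usually choose scales per channel or per block, often from a calibration dataset.

```python
def quantize_int8(values):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole list; an all-zero input falls back to 1.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate floats from the int8 codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.0, 0.25, -1.27, 1.27]
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(codes, scale, worst)
```

The rounding error per value is bounded by half the scale, so a single large outlier inflates the scale and costs precision for every other value. That sensitivity is one reason calibration data matters.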

Innovations and Discussions in AI Community

AI Model Creativity Benchmarking Discussion:

- The community discussed tasks to measure LLM capabilities in 'creative' tasks, highlighting the lack of benchmarks for creativity and diversity.

- Users expressed discontent that their favorite, Claude-3, ranked lower in creative writing benchmarks.

Participatory Algorithmic Responsibility Terms:

- Appreciation for 'algorithmic decision-making systems' term in participatory algorithmic responsibility.

- Emphasizes user involvement in understanding algorithms' impact.

Claude Tackles Spam with AI Insights:

- Anthropic's Claude chatbot faced a spam issue due to SEO manipulation of text generation.

- Raises concerns about manipulative tactics and platform integrity.

Exploring Tulu 3 Innovations:

- YouTube talk on 'Tulu 3: Exploring Frontiers in Open Language Model Post-Training.'

- Focus on RLHF and post-training techniques, insightful questions raised.

Calsoft launches CalPitch for Business Development:

- Tool assists business development team in researching prospects and drafting outreach emails.

- Demonstrates how AI can enhance and speed up workflows.

Build RAG agents with SharePoint Permissions:

- New feature respects SharePoint permissions, addressing Azure stack users' requests.

- Offers tailored experience with data in compliance with existing structures.

Google unveils Gemini 2.0 models with Day-0 support:

- Google's Gemini 2.0 models launched with day-0 support.

- Promises enhanced speed and capabilities, considered a game-changer in the AI landscape.

Discord reaching critical mass:

- Discord noted for reaching critical mass, reflecting platform's stability.

- Users show confidence and positive outlook on platform growth and operations.

Gorilla LLM (Berkeley Function Calling) and Axolotl AI Discussions

The section discusses various topics related to Gorilla LLM, including challenges faced in fine-tuning for custom APIs, downloading the GoEx model, and implementing reversibility strategies. Additionally, insights into PyTorch's tunable operations and their potential benefits for CUDA operations are shared. The Axolotl AI discussion revolves around the discovery of PyTorch's tunable operations and the optimization possibilities it offers for developers. The section also covers Mozilla AI announcements, highlighting the recent Mozilla Builders Demo Day and social media buzz surrounding the event.

FAQ

Q: What are some key highlights from the AI Twitter Recap and AI Reddit Recap?

A: The AI Twitter Recap provided insights into AI model releases, updates, infrastructure, development, industry updates, and humor from Twitter discussions. The AI Reddit Recap covered discussions from /r/LocalLlama, including topics like roleplaying and prompt handling excellence of Llama 3.3-70B, Microsoft's Phi-4 small language model, and the comparison between OpenAI o1 and Claude 3.5 Sonnet for a $20 tier subscription.

Q: What are some key points regarding Gemini's performance in math benchmarks?

A: Gemini and Qwen are highlighted for their exceptional performance on U-MATH, with Gemini considered the greatest of all time (GOAT) and Qwen recognized as the leading performer. Gemini consistently outperforms other models across various benchmarks like U-MATH, LiveBench, and FrontierMath. However, Gemini struggles with contextual cues while leading in abilities overall.

Q: What discussions were highlighted regarding AI model merging, quantization, and LoRA adapters?

A: Discussions highlighted the challenges of model merging and quantization, emphasizing the importance of preserving model quality by advocating for full precision merging. The role of LoRA adapters in optimizing fine-tuning and VRAM usage for AI models was extensively discussed.

Q: What were the key topics discussed across Discord channels regarding AI tools and platforms?

A: Users engaged in conversations regarding performance issues, model comparisons, credit management concerns, user experiences, and feedback on tools like Windsurf, Codeium, and other AI platforms. They also shared their experiences with debugging tools like O1 Pro, the performance of Gemini Flash, challenges with DeepSeek, and the efficiency of different models for coding tasks.

Q: What were some highlights from the discussions on AI model creativity benchmarking?

A: The community discussed tasks to measure LLM capabilities in 'creative' tasks, highlighting the lack of benchmarks for creativity and diversity. Users expressed discontent that Claude-3 ranked lower in creative writing benchmarks.

Q: What was the focus of the discussions regarding participatory algorithmic responsibility terms?

A: There was appreciation for the term 'algorithmic decision-making systems' in participatory algorithmic responsibility, emphasizing user involvement in understanding algorithms' impact.

Q: What insights were shared about Google's Gemini 2.0 models and their launch?

A: Google's Gemini 2.0 models launched with day-0 support, promising enhanced speed and capabilities, considered a game-changer in the AI landscape.

Q: What topics were discussed in relation to Discord reaching critical mass?

A: Discord was noted for reaching critical mass, reflecting the platform's stability. Users showed confidence and a positive outlook on platform growth and operations.
